Successful GraphRAG implementation depends primarily on clear human governance (defined roles, ownership, and review processes) rather than on technical capabilities alone. The first 90 days are critical for building the team structures and trust practices that determine whether the system becomes reliable business infrastructure or stalls as a proof of concept.

Enterprise AI has a credibility problem. Shortfalls in accuracy, explainability, and trust are often treated as technical defects when, at heart, they are human problems of stewardship and process. The systems work as designed. It is the stewardship around them that decides whether they are usable.

As Andreas Blumauer, Senior VP of Graphwise, puts it in an episode of ‘Don’t Panic, It’s Just Data’:

“AI is still a tool for human beings… A lot of people believe you can just substitute human beings as much as possible. This is the misconception.”

Treat that as the baseline. GraphRAG governance is a human discipline first, a data model second.

The teams you assemble and the habits you establish in the first 90 days will decide whether GraphRAG becomes business infrastructure or stalls at proof of concept.

Why the human layer determines GraphRAG success

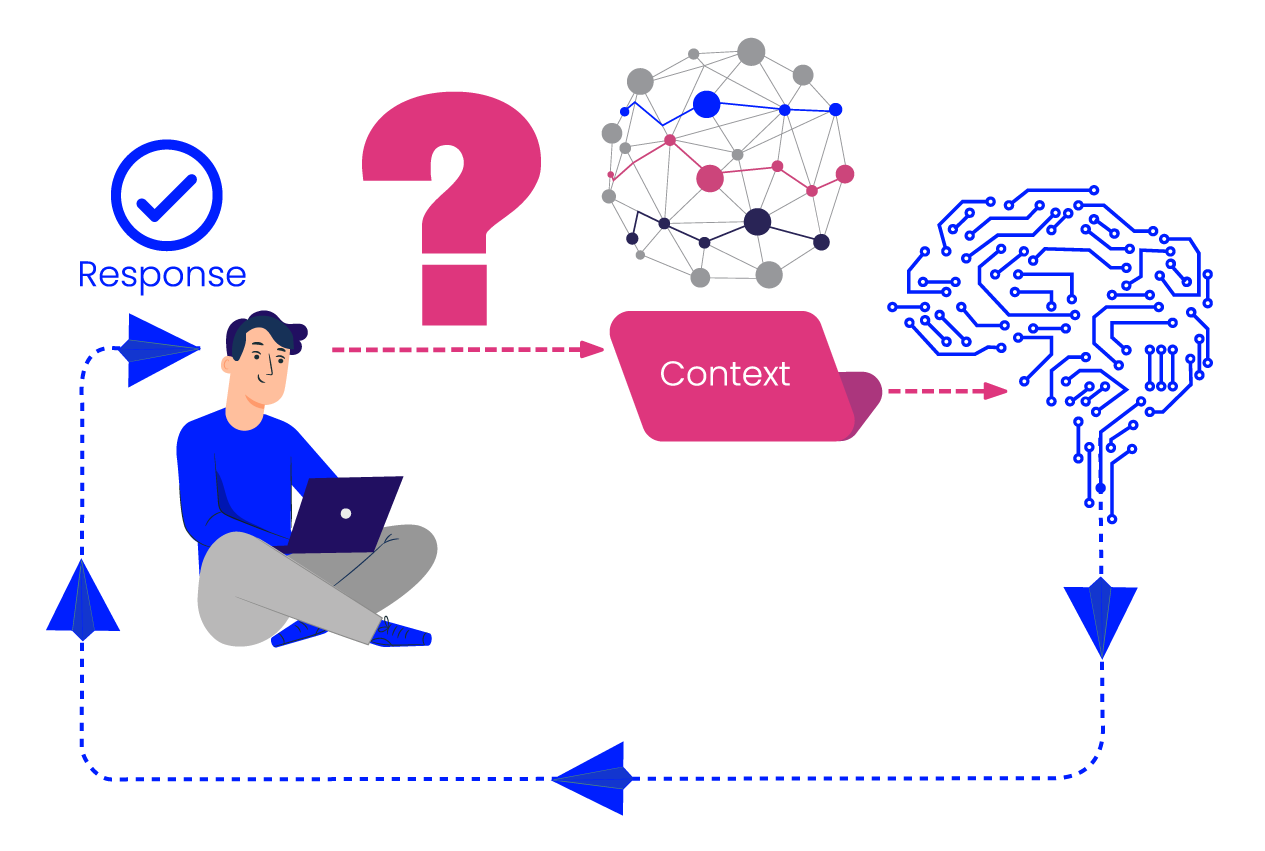

GraphRAG is an approach designed to make enterprise AI more accurate and reliable. It combines Retrieval-Augmented Generation (RAG) with a knowledge graph. Where standard RAG retrieves unstructured snippets of text, GraphRAG retrieves facts from the structured graph with their explicit relationships, order, and meaning intact. That structure is what keeps retrieval from taking wrong turns and makes answers traceable to their sources. The catch is that structure does not appear by itself. Subject matter experts define it. Data stewards maintain it. Engineers implement it. Leaders protect it with clear ownership and cadence.

Projects slip not because the model is weak but because accountability is fuzzy. Without defined ownership for ontology management, no one resolves naming conflicts or schema drift. Without a clear review path, accuracy plateaus. And without a sponsor linking governance to outcomes, the work feels like overhead.

The human layer brings data, domain, and decision expertise together so that semantic alignment holds and the system earns trust at scale. That is enterprise AI governance in practice.

Building the team: who owns what in the first 90 days

Your early GraphRAG programme needs a small, committed cast. Keep roles crisp and publish a RACI (responsible, accountable, consulted, informed) matrix so decisions move.

Executive sponsor (Responsible): Sets outcome targets, unblocks funding, and secures cross-department buy-in. The sponsor anchors governance to business value so the work survives the first roadblock.

Data steward or ontology lead (Accountable): Owns metadata management, vocabulary control, and mapping between schemas. This role keeps the graph consistent and signs off structural changes to it.

Domain expert or SME (Consulted): Validates meaning. Confirms that concepts, synonyms, and relationships reflect how the business actually works. SMEs also review sampled answers for relevance and tone.

AI and data engineering (Responsible): Implements retrieval pipelines, versioning, and evaluation harnesses. Engineers build the system and report on AI accuracy, latency, and source traceability so leaders can see progress.

Knowledge governance council (Informed and advisory): A lightweight group that meets on a set cadence to review changes, risks, and metrics across functions. The council is a backstop for compliance and long-term standards.
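A RACI can live in a spreadsheet, but keeping it as data makes it easy to check. The sketch below is a minimal illustration, assuming a hypothetical decision name and role keys drawn from the roles above. It applies the common RACI rule that each decision has exactly one Accountable role and at least one Responsible role.

```python
from typing import Dict, List

# Hypothetical RACI matrix: decision -> {role: code}. The decision name and
# role keys are illustrative, loosely following the roles described above.
RaciMatrix = Dict[str, Dict[str, str]]

raci: RaciMatrix = {
    "approve structural ontology change": {
        "data_steward": "A",   # signs off structural changes
        "engineering": "R",    # implements the change in the pipelines
        "sme": "C",            # validates meaning before sign-off
        "council": "I",        # kept informed; escalation backstop
    },
}

VALID_CODES = {"R", "A", "C", "I"}


def check_raci(matrix: RaciMatrix) -> List[str]:
    """Return a list of problems; an empty list means every decision is well formed."""
    problems: List[str] = []
    for decision, roles in matrix.items():
        codes = list(roles.values())
        unknown = [code for code in codes if code not in VALID_CODES]
        if unknown:
            problems.append(f"{decision}: unknown codes {unknown}")
        # Common RACI rule: exactly one Accountable role per decision.
        if codes.count("A") != 1:
            problems.append(f"{decision}: needs exactly one Accountable role")
        # Someone must actually do the work.
        if "R" not in codes:
            problems.append(f"{decision}: no Responsible role")
    return problems


print(check_raci(raci))  # → []
```

A decision with two Accountable roles and no Responsible role would come back with two problems, which is usually the conversation worth having before the first structural change lands.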

Treat knowledge graph governance as a living practice. Roles will deepen over time, but clarity at the start keeps the programme from stalling.

Phase one: foundations that build trust

Days 1 to 30. Aim for setup, not scale. You are building the conditions for reliability.

Run a governance readiness audit

List where metadata lives, how vocabularies are managed, and which teams own the sources. Note silos, duplication, and unapproved variants. Capture current search pain points. This is your baseline for traceability.

Define a core taxonomy

Start narrow. Choose a high-impact slice of content and derive a controlled vocabulary from real queries and real docs. Normalise synonyms. Adopt clear naming rules. Andreas highlights the early value of “annotating documents consistently with a taxonomy.” That consistency is how AI explainability begins.
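A controlled vocabulary can start as little more than a mapping from preferred labels to their synonyms. The following is a minimal Python sketch, assuming a hypothetical two-concept vocabulary. The preferred-label and synonym split loosely mirrors SKOS `prefLabel` and `altLabel`; `build_lookup` and `normalise` are illustrative names, not part of any library.

```python
from typing import Dict, List, Optional

# Hypothetical controlled vocabulary: preferred label -> synonyms.
# The structure loosely mirrors SKOS prefLabel / altLabel.
VOCABULARY: Dict[str, List[str]] = {
    "Customer": ["client", "account holder"],
    "Invoice": ["bill", "statement of charges"],
}


def _key(label: str) -> str:
    """Normalise case and whitespace so 'Account  Holder' matches 'account holder'."""
    return " ".join(label.lower().split())


def build_lookup(vocabulary: Dict[str, List[str]]) -> Dict[str, str]:
    """Map every normalised label (preferred or synonym) to its preferred label."""
    lookup: Dict[str, str] = {}
    for preferred, synonyms in vocabulary.items():
        for label in [preferred, *synonyms]:
            key = _key(label)
            # A synonym that points at two concepts is exactly the kind of
            # naming conflict the data steward should resolve by hand.
            if key in lookup and lookup[key] != preferred:
                raise ValueError(f"'{label}' maps to both {lookup[key]} and {preferred}")
            lookup[key] = preferred
    return lookup


def normalise(term: str, lookup: Dict[str, str]) -> Optional[str]:
    """Return the preferred label for a term, or None if it is not in the vocabulary."""
    return lookup.get(_key(term))


lookup = build_lookup(VOCABULARY)
print(normalise("Client", lookup))    # → Customer
print(normalise("supplier", lookup))  # → None
```

Failing loudly on a conflicting synonym, rather than silently keeping the last mapping, is a deliberate choice: it turns schema drift into a visible decision for the steward.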

Document simple standards

Write down how to name entities, tag content, and map fields. Create a one-page checklist that engineers and SMEs can follow without a meeting. Publish it where people actually work.

Set initial KPIs for accuracy and trust

Measure more than hit rate. Track source citation rate, answer completeness, and the percentage of answers that include provenance links. Define acceptance thresholds per use case. Early knowledge graph accuracy is less about perfection and more about confidence signals that help people trust what they see.
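The KPIs above reduce to simple rates over a sample of logged answers. This sketch assumes a hypothetical `LoggedAnswer` record with three yes/no judgements and illustrative per-use-case thresholds; the numbers are placeholders, not recommendations.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class LoggedAnswer:
    """One system answer as it might be logged for review (hypothetical schema)."""
    cites_source: bool          # names at least one source document
    has_provenance_link: bool   # links back to the graph node or document
    complete: bool              # reviewer judged the answer complete


def trust_kpis(answers: List[LoggedAnswer]) -> Dict[str, float]:
    """Compute simple rate-based trust KPIs over a sample of answers."""
    if not answers:
        raise ValueError("no answers to score")
    n = len(answers)
    return {
        "source_citation_rate": sum(a.cites_source for a in answers) / n,
        "provenance_link_rate": sum(a.has_provenance_link for a in answers) / n,
        "completeness_rate": sum(a.complete for a in answers) / n,
    }


# Illustrative acceptance thresholds per use case; real values are a business decision.
THRESHOLDS: Dict[str, Dict[str, float]] = {
    "support_search": {"source_citation_rate": 0.9, "provenance_link_rate": 0.8},
}


def failing_kpis(kpis: Dict[str, float], thresholds: Dict[str, float]) -> List[str]:
    """Return the KPIs that fall below their acceptance threshold."""
    return [name for name, minimum in thresholds.items() if kpis.get(name, 0.0) < minimum]
```

Keeping thresholds per use case, rather than one global number, lets a low-risk internal search tool go live while a customer-facing one is still held to a stricter bar.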

Phase two: connect people, then connect data

Days 31 to 60. Bring the right people into the same working rhythm before you widen the data scope.

Run alignment sprints

Pair a data engineer with an SME for focused sessions on one workflow at a time. Review the top tasks users actually perform. Agree on the terms that matter, the relationships that must be preserved, and the exceptions that will cause confusion. Short sessions beat long workshops.

Co-design the ontology

Use collaborative tools so SMEs can see and comment on the structure. Keep the first passes simple. You are aiming for semantic interoperability, not elegance. Publish the ontology in a readable format and include examples of good and bad tagging.

Create review cycles

Set a two-week cadence for taxonomy and ontology review. Each cycle should include a small sample of annotated content, a list of proposed changes, and a decision log. Decisions without records are the fastest way to regress.
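A decision log does not need special tooling. A minimal sketch, assuming hypothetical `Proposal`, `Decision`, and `ReviewCycle` types, records each outcome with its rationale and carries deferred proposals into the next two-week cycle.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List

OUTCOMES = {"accepted", "rejected", "deferred"}
CYCLE_LENGTH = timedelta(days=14)  # the two-week review cadence


@dataclass
class Proposal:
    concept: str
    change: str        # e.g. "add synonym 'client' to Customer"
    proposed_by: str


@dataclass
class Decision:
    proposal: Proposal
    outcome: str
    rationale: str
    decided_on: date


@dataclass
class ReviewCycle:
    start: date
    decisions: List[Decision] = field(default_factory=list)

    def decide(self, proposal: Proposal, outcome: str, rationale: str, decided_on: date) -> None:
        """Record a decision; refuse anything that would leave no usable record."""
        if outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome: {outcome}")
        if not rationale.strip():
            raise ValueError("every decision needs a recorded rationale")
        if not (self.start <= decided_on < self.start + CYCLE_LENGTH):
            raise ValueError("decision date falls outside this review cycle")
        self.decisions.append(Decision(proposal, outcome, rationale, decided_on))

    def carried_over(self) -> List[Proposal]:
        """Deferred proposals go onto the next cycle's agenda."""
        return [d.proposal for d in self.decisions if d.outcome == "deferred"]

    def next_start(self) -> date:
        return self.start + CYCLE_LENGTH
```

Rejecting a decision without a rationale is the code equivalent of the rule above: a decision with no record is the fastest way to regress.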

Stand up feedback mechanisms

Show progress visually. A basic data-quality dashboard that surfaces missing tags, unmapped fields, and stale entities tells everyone what to fix next. Add an accuracy report that compares system answers against a gold-standard Q&A set. People trust what they can see.
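The dashboard checks and the gold-standard comparison can begin as a few lines of code run against an export of the graph's metadata. The sketch below assumes hypothetical record fields (`id`, `tags`, `fields`, `last_reviewed`), a 180-day staleness window, and exact matching against the gold-standard answers, all of which you would tune to your own data.

```python
from datetime import date, timedelta
from typing import Dict, List, Set


def quality_report(records: List[dict], mapped_fields: Set[str], today: date,
                   max_age_days: int = 180) -> Dict[str, List[str]]:
    """Summarise missing tags, unmapped source fields and stale entities.

    Each record is a hypothetical dict with 'id', 'tags', 'fields' and
    'last_reviewed' keys; the 180-day staleness window is an assumption.
    """
    max_age = timedelta(days=max_age_days)
    return {
        "missing_tags": [r["id"] for r in records if not r.get("tags")],
        "unmapped_fields": sorted({f for r in records for f in r.get("fields", [])
                                   if f not in mapped_fields}),
        "stale_entities": [r["id"] for r in records if today - r["last_reviewed"] > max_age],
    }


def gold_standard_accuracy(system_answers: Dict[str, str], gold: Dict[str, str]) -> float:
    """Share of gold-standard questions whose system answer matches exactly after
    normalising case and whitespace. Real evaluations usually need SME judgement
    or fuzzier matching; exact match keeps the sketch simple."""
    if not gold:
        raise ValueError("empty gold-standard set")

    def norm(text: str) -> str:
        return " ".join(text.lower().split())

    hits = sum(1 for question, expected in gold.items()
               if norm(system_answers.get(question, "")) == norm(expected))
    return hits / len(gold)
```

Each list in the report maps directly to a "fix next" queue, which is what makes the dashboard useful rather than decorative.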

Phase three: operationalising governance

Days 61 to 90. Make governance part of how the organisation works so it scales with new teams and use cases.

Formalise change control

Version the ontology and taxonomies. Record who changed what, why it changed, and when it was approved. Require short impact notes for structural changes so engineers can adjust retrieval and evaluation safely. This is the start of your knowledge lifecycle.
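Change control can be sketched as an append-only log that bumps the ontology version on each approved change. This assumes a hypothetical `ChangeRecord` type and a semantic-versioning-style convention, chosen for illustration, in which structural changes bump the major version and additive ones the minor version. Structural changes are rejected without an impact note.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple

Version = Tuple[int, int]  # (major, minor)


@dataclass(frozen=True)
class ChangeRecord:
    author: str                 # who changed it
    description: str            # what changed
    reason: str                 # why it changed
    approved_on: date           # when it was approved
    structural: bool = False    # e.g. splitting or removing a class
    impact_note: Optional[str] = None


class OntologyChangeLog:
    """Append-only history of approved ontology changes."""

    def __init__(self, version: Version = (1, 0)) -> None:
        self.version: Version = version
        self.history: List[Tuple[Version, ChangeRecord]] = []

    def apply(self, change: ChangeRecord) -> Version:
        # Engineers need to know what to adjust before a structural change lands.
        if change.structural and not (change.impact_note or "").strip():
            raise ValueError("structural changes need an impact note")
        major, minor = self.version
        self.version = (major + 1, 0) if change.structural else (major, minor + 1)
        self.history.append((self.version, change))
        return self.version


log = OntologyChangeLog()
print(log.apply(ChangeRecord("ana", "add synonym 'client' to Customer",
                             "frequent in user queries", date(2024, 3, 1))))  # → (1, 1)
```

Bumping the major version on structural changes gives retrieval and evaluation code a simple signal: anything pinned to the old major version needs a look before upgrading.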

Stand up the knowledge governance council

Keep it small. Give it a clear remit to review metrics, approve higher-risk changes, and escalate cross-team blockers. Publish a one-page charter. The goal is to remove friction, not create ceremony.

Link governance to business KPIs

Tie trust metrics to outcomes leaders care about. Faster query response, lower rework, higher self-service case deflection, fewer duplicate answers, and a rising traceability rate are all signals that the system is paying its way.

Create a trust dashboard

One view that shows data provenance, freshness, coverage of critical vocabularies, and current AI governance status helps non-technical leaders see progress. Include a simple trend on answer precision against your gold standard so improvements are visible.

Keep maturity measurable and clear

Maturity is not the size of the ontology. It is the clarity of ownership, the speed of changes, and the confidence people have in the answers. Add automation carefully. Automate checks that a human has already validated as useful. Governance automation without human intent only hides problems.

Practical tips that save time

Limit the blast radius: Choose one business area for the first 90 days. It keeps decisions grounded in reality and reduces debates about edge cases that do not matter yet.

Write for users, not for the graph: Examples and counter-examples make standards usable. For every rule, include a short snippet that shows what good looks like.

Prefer small, frequent changes: Weekly taxonomy updates beat quarterly overhauls. Small changes maintain momentum and reduce risk.

Build source-of-truth habits: Put standards, vocabularies, and decision logs where people work every day. If tools are fragmented, link them in a single start page.

Measure what helps people trust the system: Track the percentage of answers with citations, the number of times users click through to the source, and the rate of SME acceptance during sampling. These are human trust signals.

Final thoughts: governance is the real engine of trust

The lesson is simple. Accuracy and trust in enterprise AI do not come from bigger models. They come from structure and stewardship. GraphRAG governance earns its keep when teams choose clear ownership, small consistent standards, and regular review over grand designs. That is the difference between a clever demo and a dependable system.

Three points to carry forward. First, the human layer is not optional. SMEs, stewards, engineers, and sponsors bring context that models cannot infer. Second, trust grows where answers are traceable and change is visible. Third, governance is the bridge between data and decision. Get that bridge right and the benefits compound across search, support, and strategy.

Andreas was right to challenge the myth. AI does not replace people. It helps people make sense of complexity. If you want the unvarnished view on why that matters, tune in to the conversation between Andreas Blumauer and EM360Tech on ‘Don’t Panic, It’s Just Data’ and hear the thinking behind the practice.

For more enterprise perspectives on trustworthy AI and the semantic layer, stay close to EM360Tech where we work with leaders who are putting these ideas to work. If GraphRAG is on your roadmap, now is the time to put people at the centre of your plan and let governance do its job.

Do you want to dive deeper into the concept of the semantic layer?