This post explains how semantic layers and GraphRAG provide the essential foundation for building trustworthy AI systems that can accurately understand organizational context and deliver reliable results.

Organizations across industries face a persistent challenge: despite investing heavily in data repositories and content management systems, valuable knowledge remains fragmented across silos. This creates significant barriers when employees need quick and accurate answers to complex questions.

The enthusiasm for large language models (LLMs) is undeniable. Yet although companies are investing heavily in AI initiatives, early implementations often fall short because of hallucinations, missing context, and an inability to distinguish critical from supplementary information.

The solution lies in building a solid semantic foundation that can handle the complexity and precision modern enterprises demand. In other words, organizations need to combine semantic layers with GraphRAG approaches that preserve structure, provide domain context, and ensure factual accuracy.

The modern knowledge management challenge

It is becoming increasingly clear why modern knowledge management systems are central to successful AI deployments. Modern knowledge management is defined by the timely provision of contextually relevant, personalized information. This isn’t just about data retrieval. It’s fundamentally about context, personalization, and bringing together data from all types of repositories, with their different structures and multimodal content.

The core challenge is building semantic layers on top of existing repositories, layers that can contextualize data in ways that allow user intent to be interpreted properly. When questions are asked through customer service portals, the system must understand not just the words being used, but the meaning behind them within the specific organizational context.

Critical to this understanding is the ability to combine factual data, typically found in SQL databases, with textual and narrative content from emails, SharePoint documents, and other unstructured sources. This integration is essential because users no longer accept imprecise results. The stakes have risen significantly, and organizations whose first AI implementations failed to deliver accurate results learned this lesson the hard way.

Beyond simple data integration

A semantic layer serves as a unifying framework that integrates an organization’s complete knowledge base, not just isolated structured data or content. It helps answer complex questions by enriching data with business context through taxonomies and by explicitly mapping relationships between data and content systems using ontologies and knowledge graphs.

The power of semantic layers lies in shifting focus from the physical data itself to the descriptive data about the data — the metadata. This creates a fabric, a middle layer that allows organizations to connect data without physically moving it from one system to another. Most organizations house their data, content, and knowledge across 10 or more different systems, and semantic layers provide the logical architecture to connect these pieces using metadata.

From an AI perspective (and here AI should be understood in the broader sense, encompassing not just generative AI and LLMs but also machine learning and other AI components), semantic layers prove invaluable. This is because AI doesn’t care about the type of data it processes. It needs to aggregate all organizational information to make sense of it, and semantic layers provide the contextual information, logic, and vocabulary meaning that AI systems require.

The enterprise context problem

The fundamental challenge with LLMs is that they are exactly what their name suggests: large language models. They may excel at the languages they were trained on, but they don’t understand an organization’s own language. Revenue for a coffee company or retailer works very differently from revenue for a bank, yet LLMs lack the context to tell these meanings apart.

This is where semantic solutions and semantic web standards become crucial. By assigning unique identifiers, known as IRIs (Internationalized Resource Identifiers, which resemble URLs), to concepts such as “revenue” within specific organizational contexts, semantic layers enable AI systems to understand meaning rather than just words. This specificity transforms how AI interprets and responds to organizational queries.

The maturity spectrum of semantic layer components ranges from basic metadata stores that describe which data is available, to controlled vocabularies that establish parent-child relationships, to taxonomies and ontologies that make vocabulary machine-readable across departments. When marketing and sales define “customer” differently, the semantic layer ensures that these differences are clearly defined and consistently applied.
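As a minimal sketch of how IRIs and department-specific definitions might fit together, the Python below models a tiny concept scheme loosely after SKOS, the W3C vocabulary for taxonomies. The namespace `https://example.org/kg/`, the concept names, the definitions, and the department-to-concept mapping are all hypothetical placeholders; a production semantic layer would typically keep this in an RDF store and query it with SPARQL.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical namespace; a real deployment would use the organization's own IRI base.
EX = "https://example.org/kg/"


@dataclass(frozen=True)
class Concept:
    iri: str                       # globally unique identifier for the concept
    pref_label: str                # the word people actually use
    definition: str                # what the word means in this context
    broader: Optional[str] = None  # IRI of the parent concept, if any


# The same label ("customer") maps to three distinct concepts, each with its own IRI.
# Definitions are illustrative placeholders, not real business rules.
CONCEPTS = {
    c.iri: c
    for c in [
        Concept(EX + "Customer", "customer", "Any party the company does business with."),
        Concept(EX + "marketing/Customer", "customer",
                "A contact who has engaged with a campaign.", broader=EX + "Customer"),
        Concept(EX + "sales/Customer", "customer",
                "An account with at least one signed contract.", broader=EX + "Customer"),
    ]
}

# Hypothetical mapping: which concept a department means by a given word.
DEPARTMENT_SENSES = {
    ("marketing", "customer"): EX + "marketing/Customer",
    ("sales", "customer"): EX + "sales/Customer",
}


def resolve(term: str, department: str) -> Concept:
    """Map a plain-language term to the concept a department means by it."""
    iri = DEPARTMENT_SENSES.get((department, term.lower()))
    if iri is None:
        raise KeyError(f"no sense of {term!r} defined for {department!r}")
    return CONCEPTS[iri]


def ancestors(iri: str) -> list:
    """Follow broader links from a concept up to the root of the taxonomy."""
    chain = []
    current = CONCEPTS[iri].broader
    while current is not None:
        chain.append(current)
        current = CONCEPTS[current].broader
    return chain


print(resolve("Customer", "sales").definition)
print(ancestors(EX + "sales/Customer"))
```

Because both departmental senses point to a shared broader concept, a question about customers in general can still reach both, while a sales-specific question resolves to exactly one definition.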

The evolution of AI accuracy

Basic RAG implementations using vector databases partially address the enterprise context problem by pulling in organizational content, but this content often lacks proper vetting. The “garbage in, garbage out” problem becomes apparent when information is duplicated, outdated, or irrelevant, causing AI to give incorrect answers. Even with clean information, basic RAG systems struggle to determine which content is truly relevant and how to frame answers appropriately based on that content.

This is where knowledge graphs become essential. They provide LLMs with a clear understanding of concepts. To return to the revenue example, knowledge graphs convey what revenue means within specific organizational contexts and identify content related to those concepts.
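A minimal sketch of that lookup, assuming the knowledge graph is held as plain (subject, predicate, object) triples in memory: given a concept’s IRI, it gathers the concept’s definition, its broader concept, and the documents tagged as being about it, which could then be passed to an LLM as grounding context. The IRIs, predicate names (`definition`, `broader`, `about`), and document names are hypothetical; a real system would typically run an equivalent SPARQL query against a graph database.

```python
# A knowledge graph held as (subject, predicate, object) triples.
# All IRIs, predicate names, and documents are hypothetical examples.
EX = "https://example.org/kg/"

TRIPLES = {
    (EX + "Revenue", "definition", "Placeholder: how this organization defines revenue."),
    (EX + "Revenue", "broader", EX + "FinancialMetric"),
    (EX + "doc/q3-report", "about", EX + "Revenue"),
    (EX + "doc/pricing-policy", "about", EX + "Revenue"),
    (EX + "doc/onboarding-guide", "about", EX + "Employee"),
}


def objects(subject, predicate):
    """All objects reachable from subject via predicate, sorted for stable output."""
    return sorted(o for s, p, o in TRIPLES if s == subject and p == predicate)


def subjects(predicate, obj):
    """All subjects that point to obj via predicate, sorted for stable output."""
    return sorted(s for s, p, o in TRIPLES if p == predicate and o == obj)


def concept_context(concept_iri):
    """Collect what the graph knows about a concept, e.g. to ground an LLM prompt."""
    return {
        "concept": concept_iri,
        "definitions": objects(concept_iri, "definition"),
        "broader": objects(concept_iri, "broader"),
        "related_documents": subjects("about", concept_iri),
    }


print(concept_context(EX + "Revenue")["related_documents"])
```

The onboarding guide is never returned for a revenue question, because the graph states explicitly what each document is about rather than relying on textual similarity.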

Think of the difference this way: in basic LLM scenarios, AI is like someone opening a book and reading a paragraph without any context about the book’s title, subject, or purpose. With knowledge graphs, AI has a complete catalog showing where the book belongs, which department created it, and what content it contains.

Types of GraphRAG implementation

Different use cases call for different GraphRAG implementations, so understanding how GraphRAG systems are classified is essential for selecting the right approach.

First, there is vector RAG, which extracts and summarizes information from a few relevant document chunks but loses the original structure of the data. If you have spent decades building a well-structured content repository, all of that structure is lost, which also drives imprecision and hallucinations.
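To illustrate how structure gets lost, here is a deliberately simplified vector RAG retriever in Python. It splits text into fixed-size word windows, uses a bag-of-words term-frequency vector as a stand-in for a neural embedding (an assumption made so the example runs without a model), and ranks chunks by cosine similarity. The sample manual text is hypothetical.

```python
import math
import re
from collections import Counter


def chunk(text, size=12):
    """Split text into fixed-size word windows, ignoring headings and sections."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text):
    """Stand-in for a neural embedding: a bag-of-words term-frequency vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


# Hypothetical manual with two product sections.
manual = """# Model A
Check the relief valve pressure before replacing the pump.
# Model B
Replace the pump seal if the relief valve pressure is normal."""

chunks = chunk(manual, size=8)
for c in retrieve("relief valve pressure", chunks):
    print(c)
```

With `size=8`, the words about the pump seal land in a chunk that no longer contains the `Model B` heading, so nothing in the retrieved text says which model the instruction applies to.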

If you use graphs as metadata stores, you get content hubs in which all repository content is tagged consistently using controlled vocabularies and connected to the relevant concepts. This approach maintains the original content structure while creating content graphs stored in graph databases. It enables more precise filtering based on metadata, as well as explainability through references to the relevant documents and concepts.
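A minimal sketch of the metadata-store pattern, assuming a small hypothetical controlled vocabulary and naive substring matching for tagging (real content hubs would use entity extraction and a graph database). Each document keeps its original identifier and section, so filtering by concept tag and by a metadata field such as product returns citable references rather than anonymous text fragments.

```python
from dataclasses import dataclass, field

# Hypothetical controlled vocabulary: preferred label -> concept IRI.
VOCAB = {
    "relief valve": "https://example.org/kg/ReliefValve",
    "gear pump": "https://example.org/kg/GearPump",
    "seal": "https://example.org/kg/Seal",
}


@dataclass
class Document:
    doc_id: str
    section: str   # original structure is kept rather than flattened into chunks
    text: str
    product: str   # example metadata field
    tags: set = field(default_factory=set)


def tag(doc: Document) -> Document:
    """Attach the concept IRI of every vocabulary label found in the text (naive substring match)."""
    lowered = doc.text.lower()
    doc.tags = {iri for label, iri in VOCAB.items() if label in lowered}
    return doc


def find(docs, concept_iri, product=None):
    """Filter by concept tag and optional product; return (doc_id, section) references."""
    return [
        (d.doc_id, d.section)
        for d in docs
        if concept_iri in d.tags and (product is None or d.product == product)
    ]


docs = [
    tag(Document("manual-A", "4.2 Troubleshooting",
                 "Check the relief valve before replacing the gear pump.", "Model A")),
    tag(Document("manual-B", "3.1 Maintenance",
                 "Replace the seal if the relief valve pressure is normal.", "Model B")),
]

print(find(docs, VOCAB["relief valve"], product="Model B"))  # [('manual-B', '3.1 Maintenance')]
```

The returned `(doc_id, section)` pairs are what makes answers explainable: each statement can be traced back to a specific section of a specific document.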

You can build on this foundation and use the graph as an expert by adding domain knowledge models consisting of taxonomies and ontologies. Here, the graph introduces background knowledge alongside the existing organizational data. This background knowledge, the contextual understanding needed to interpret technical documents or product information properly, typically exists nowhere in digitized form but proves crucial for sophisticated AI applications.
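One way a domain model can act as an expert is query expansion: before retrieval, a concept in the user’s question is expanded to the narrower and related concepts the model knows about. The sketch below assumes a tiny hypothetical hydraulics model stored as an adjacency list and walks it breadth-first up to a fixed depth; the concept and relation names are illustrative, loosely following the SKOS `narrower` and `related` relations.

```python
from collections import deque

# Hypothetical hydraulics domain model: concept -> [(relation, concept), ...].
DOMAIN_MODEL = {
    "HydraulicPump": [("narrower", "GearPump"), ("narrower", "PistonPump")],
    "LowSystemPressure": [("related", "ReliefValve"), ("related", "HydraulicPump")],
    "GearPump": [("hasPart", "Seal")],
}


def expand(concept, relations=("narrower", "related"), max_depth=2):
    """Breadth-first expansion of a concept along the chosen relations."""
    seen = {concept}
    queue = deque([(concept, 0)])
    while queue:
        current, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not follow links beyond the depth limit
        for relation, target in DOMAIN_MODEL.get(current, []):
            if relation in relations and target not in seen:
                seen.add(target)
                queue.append((target, depth + 1))
    return seen


print(sorted(expand("LowSystemPressure")))
```

Expanding `LowSystemPressure` pulls in the relief valve and both pump types, background knowledge a technician would bring but which the symptom description alone does not state. Restricting `relations` or `max_depth` keeps the expansion from drifting too far.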

The most comprehensive approach, however, is to use graphs as complete databases. This creates data fabrics consisting of semantic metadata, content graphs, domain knowledge models, and linked factual data, either materialized in graph databases or accessed virtually. This approach truly links different repositories (typically 10 or more) containing both structured and unstructured data, enabling “talk-to-your-graph” applications.
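The sketch below hints at how a data fabric can answer one question from two kinds of sources. It assumes structured service-order facts stay in their own store (an in-memory SQLite table standing in for an operational system) and are queried at request time, while a small content graph links documents to the same product IRIs. All table names, IRIs, and rows are hypothetical sample data.

```python
import sqlite3

# Structured facts stay in their own store; an in-memory SQLite table stands in
# for an operational system here. Table, column names, and rows are hypothetical.
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE service_orders (order_id TEXT, product_iri TEXT, fixed_first_time INTEGER)"
)
db.executemany(
    "INSERT INTO service_orders VALUES (?, ?, ?)",
    [("SO-1", "ex:ModelA", 1), ("SO-2", "ex:ModelA", 0), ("SO-3", "ex:ModelB", 1)],
)

# Content graph linking documents to the same (hypothetical) product IRIs.
CONTENT_GRAPH = [
    ("doc:manual-A", "about", "ex:ModelA"),
    ("doc:bulletin-17", "about", "ex:ModelA"),
    ("doc:manual-B", "about", "ex:ModelB"),
]


def product_view(product_iri):
    """Answer one question from both sources, joined on the shared product IRI."""
    total, fixed = db.execute(
        "SELECT COUNT(*), SUM(fixed_first_time) FROM service_orders WHERE product_iri = ?",
        (product_iri,),
    ).fetchone()
    docs = sorted(s for s, p, o in CONTENT_GRAPH if p == "about" and o == product_iri)
    # SUM returns NULL (None) when no rows match, so default to 0.
    return {"orders": total, "first_time_fixes": fixed or 0, "documents": docs}


print(product_view("ex:ModelA"))
```

The shared IRI is the only thing the two sources need to agree on; neither the rows nor the documents have to be copied into the other system.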

Real-world performance

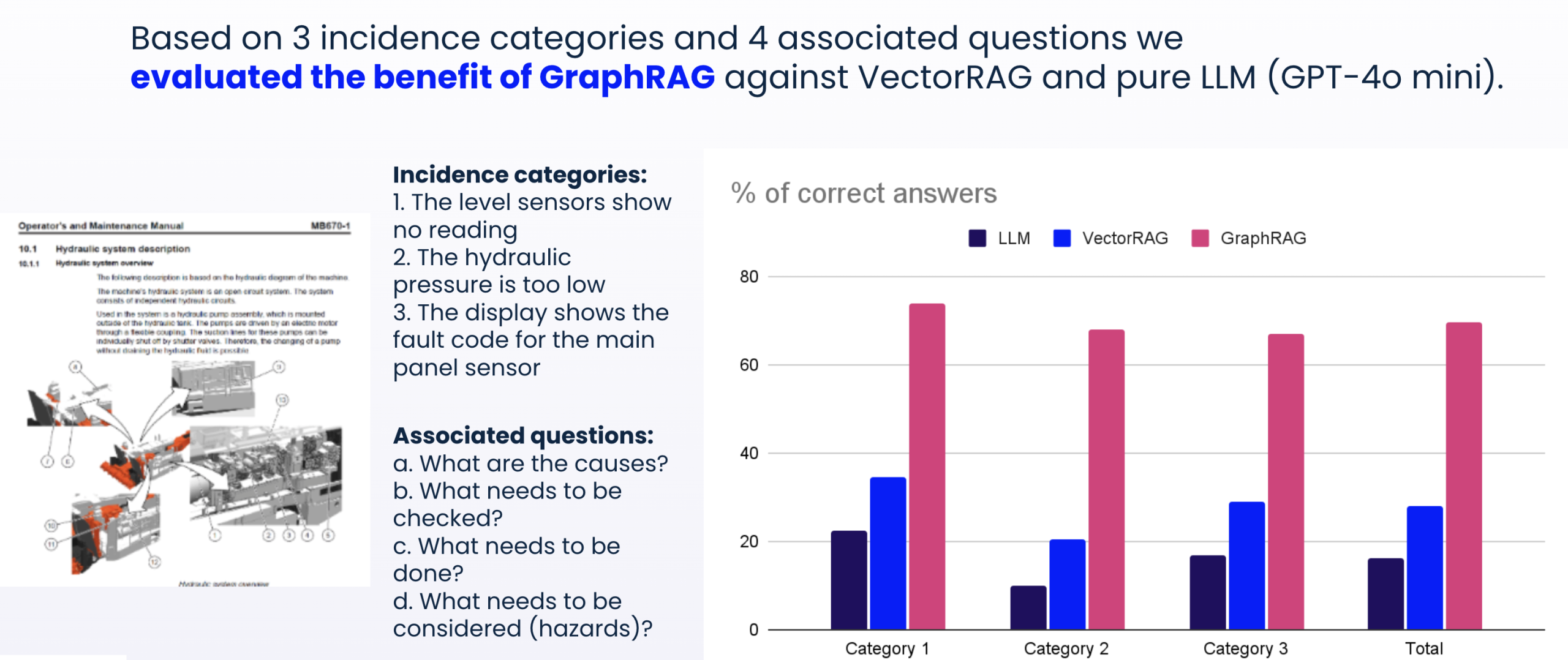

One of our projects, with a Swedish manufacturer of hydraulic systems, demonstrates the practical value of semantic layers. Hydraulic systems are complex machines, and when field engineers encounter problems, they need quick suggestions on how to fix them. First-time fix rate is an important KPI for these organizations.

A simple RAG approach such as vector RAG doesn’t solve this problem effectively. Preserving the document structure is one of the keys to interpreting the meaning of each word more precisely. You also have to build a reference model to ensure that all concepts, products, and acronyms mentioned in the documents are interpreted correctly.
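A minimal sketch of both ideas, assuming the manuals are available as Markdown-style text with `#` headings and assuming a hypothetical acronym table as the reference model: each paragraph is stored with its full heading path, and known acronyms are expanded so that every mention maps to one concept. Real document pipelines would parse the source format (PDF or HTML, for example) properly.

```python
import re

# Hypothetical reference model: acronym -> canonical concept label.
ACRONYMS = {"PRV": "pressure relief valve", "FTF": "first-time fix"}


def normalize(text: str) -> str:
    """Expand known acronyms in place, keeping the acronym in parentheses."""
    def expand(match):
        acronym = match.group(0)
        return f"{ACRONYMS[acronym]} ({acronym})" if acronym in ACRONYMS else acronym
    return re.sub(r"\b[A-Z]{2,}\b", expand, text)


def structured_chunks(text: str) -> list:
    """Split on '#' headings and keep each paragraph's heading path as context."""
    path, chunks = [], []
    for line in text.splitlines():
        heading = re.match(r"^(#+)\s+(.*)", line)
        if heading:
            level = len(heading.group(1))
            # Drop headings at this level or deeper, then append the new one.
            path = path[: level - 1] + [heading.group(2).strip()]
        elif line.strip():
            chunks.append({"path": " > ".join(path), "text": normalize(line.strip())})
    return chunks


manual = """# Model B
## Troubleshooting
If pressure drops, check the PRV before replacing the pump."""

for chunk in structured_chunks(manual):
    print(chunk["path"], "|", chunk["text"])
```

Here the single paragraph comes back tagged with the path `Model B > Troubleshooting` and with `PRV` expanded to `pressure relief valve (PRV)`, so both the product context and the concept survive into retrieval.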

A performance comparison between vector RAG and GraphRAG shows a clear difference:

The graphic above shows three performance bars: LLM alone, vector RAG, and GraphRAG. GraphRAG eventually reaches a level of accuracy that field engineers would find helpful and a good starting point.

The accuracy can be improved further by adding more knowledge to the domain models, potentially reaching 85 to 90%. While that is not 100%, which can rarely be achieved, it is a great help for support engineers, and you remain fully in control of raising it even further.

Building and refining semantic intelligence

Enterprise knowledge graphs go beyond the simple semantic layers often used in BI systems, where only a single structured database is enriched with additional context. Such graphs require domain knowledge models consisting of taxonomies and ontologies; without them, setting up enterprise knowledge graphs efficiently becomes extremely difficult. Tuning application results isn’t a one-time setup; it’s an iterative process in which applications help identify gaps in the graphs and models, and the improved models in turn generate better answers.
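The iterative loop can start with something as simple as logging which words in user questions the domain model does not cover. The sketch below assumes a hypothetical set of known labels and a small stopword list; terms that recur across queries are candidates for new concepts or synonyms in the taxonomy. This is an illustrative heuristic, not a prescribed method.

```python
import re
from collections import Counter

# Hypothetical labels (including synonyms) already in the domain model.
KNOWN_LABELS = {"relief valve", "gear pump", "seal", "pressure"}
STOPWORDS = {"the", "is", "why", "does", "my", "on", "of", "and", "to", "in"}


def uncovered_terms(queries, known=KNOWN_LABELS):
    """Count query words not covered by any model label: candidate gaps."""
    gaps = Counter()
    for query in queries:
        text = query.lower()
        # Remove longer labels first so multi-word labels are matched whole.
        for label in sorted(known, key=len, reverse=True):
            text = text.replace(label, " ")
        for word in re.findall(r"[a-z][a-z-]+", text):
            if word not in STOPWORDS:
                gaps[word] += 1
    return gaps


queries = [
    "Why does the gear pump whine?",
    "Gear pump whine on cold start",
    "Seal leaks on the relief valve",
]
print(uncovered_terms(queries).most_common(1))
```

In this toy run, "whine" surfaces as the most frequent uncovered term, which a taxonomist might then add as a symptom concept linked to pumps.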

Although domain knowledge models typically make up only about 1% of the entire semantic layer by volume, their quality determines the quality of the entire enterprise knowledge graph and keeps it consistent over time. They also help filter the appropriate facts and data from various sources, supporting the retrieval component of RAG architectures, because LLMs struggle to filter results on their own.
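As an illustration of ontology-driven filtering, the sketch below keeps only the facts whose subject belongs, directly or through inherited subclass links, to the class the question is about, before anything is handed to an LLM. The class hierarchy, fact records, and identifiers are hypothetical, and the hierarchy is assumed to be acyclic with a single parent per class; OWL ontologies allow richer structures than this.

```python
# Hypothetical ontology fragment: class -> direct superclass (single inheritance, no cycles).
SUBCLASS_OF = {
    "GearPump": "HydraulicPump",
    "PistonPump": "HydraulicPump",
    "HydraulicPump": "Component",
    "ReliefValve": "Valve",
    "Valve": "Component",
    "Invoice": "FinancialDocument",
}


def is_a(cls, target):
    """True if cls is target or a transitive subclass of it."""
    while cls is not None:
        if cls == target:
            return True
        cls = SUBCLASS_OF.get(cls)
    return False


def filter_facts(facts, target_class):
    """Keep only facts whose subject type falls under target_class."""
    return [f for f in facts if is_a(f["type"], target_class)]


# Hypothetical facts gathered from several source systems.
facts = [
    {"subject": "P-100", "type": "GearPump", "source": "spec sheet"},
    {"subject": "V-7", "type": "ReliefValve", "source": "maintenance log"},
    {"subject": "INV-42", "type": "Invoice", "source": "ERP"},
]

print([f["subject"] for f in filter_facts(facts, "HydraulicPump")])  # ['P-100']
print([f["subject"] for f in filter_facts(facts, "Component")])      # ['P-100', 'V-7']
```

The filtering decision comes from a small, curated model rather than from the LLM, which is one way a compact domain model can shape the quality of everything retrieved downstream.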

Wrapping it up

The implementation of semantic layers with GraphRAG represents a systematic approach to addressing the fundamental limitations of current AI deployments. While the enthusiasm for LLMs continues to drive investment, the combination of semantic layers and knowledge graphs provides the contextual understanding that transforms AI into a reliable business tool. This approach enables organizations to leverage their existing data repositories and content management systems while achieving the accuracy levels necessary for practical business use.

The path to trustworthy AI lies not in abandoning AI initiatives, but in building them on proper semantic foundations. Organizations ready to move beyond basic implementations and build AI systems that truly understand their business context will be able to create sustainable solutions.
