A Large Language Model (LLM) is a type of deep learning model, typically built on the Transformer architecture, that is trained on massive datasets of text and code to understand, summarize, generate, and predict human language.

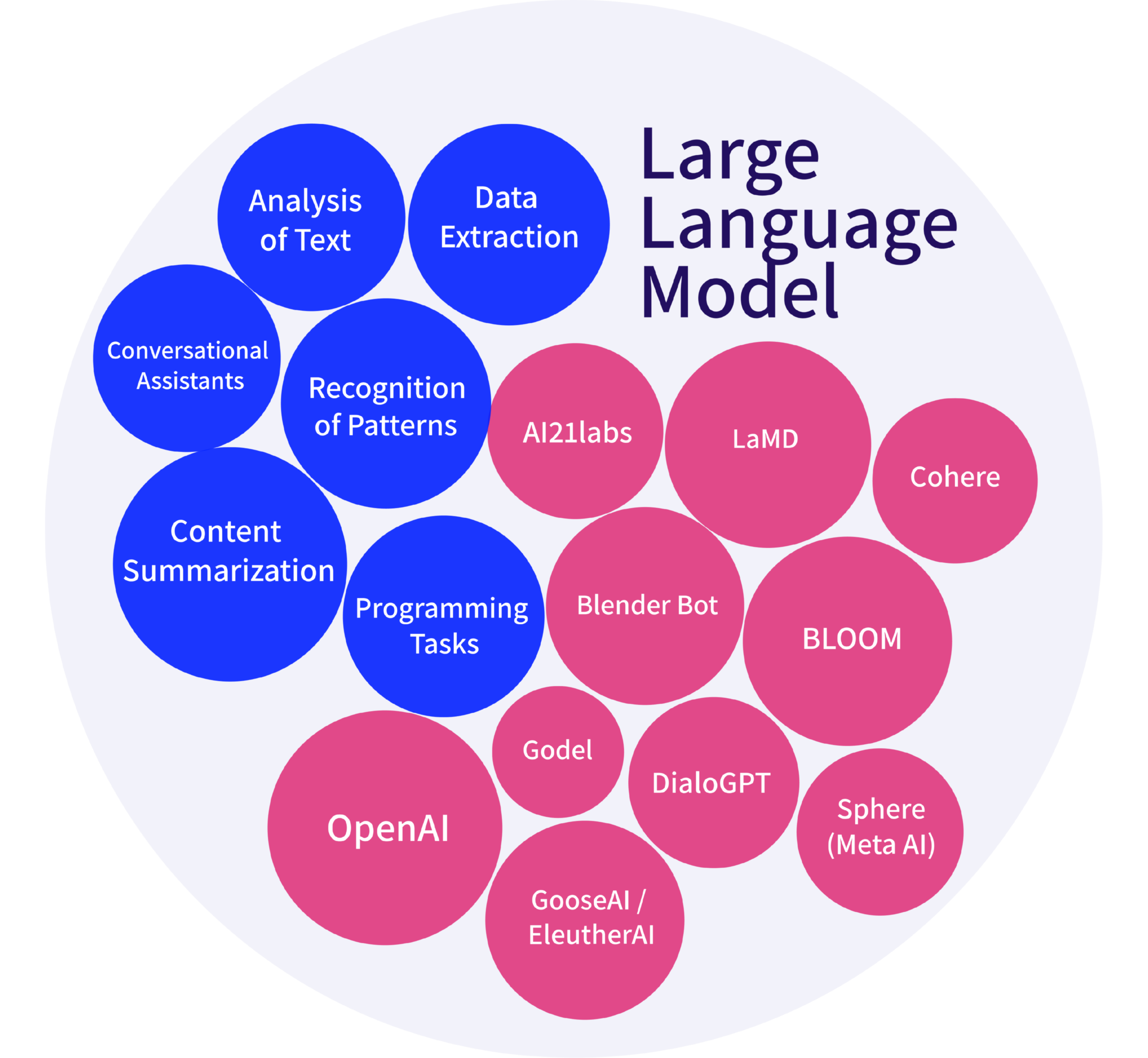

LLMs learn patterns and relationships from large volumes of textual data. Given an input, they generate new text by repeatedly predicting the most probable next token. This makes them suitable for a wide array of use cases: chatbots and virtual assistants, summarization and paraphrasing, content generation, text classification and clustering, sentiment analysis, code generation and debugging, and many more.

The fundamental idea that powers LLMs is to predict the next token with a high degree of accuracy. Pre-training gives the model this ability, while fine-tuning and prompting steer it toward a specific task. Think of it as a smart guessing engine that has been trained on internet-scale data to predict the best possible next word.
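The "smart guessing engine" idea can be illustrated with a toy sketch. This is not how an LLM works internally: real models use neural networks over sub-word tokens, whereas the example below simply counts which word follows which in a tiny made-up corpus. The function names and the corpus are assumptions made for illustration only.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Tiny hypothetical training corpus (whitespace-separated "tokens").
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept ."
model = train_bigram_model(corpus)

print(predict_next(model, "the"))      # most frequent word after "the" in the corpus
print(predict_next(model, "unicorn"))  # unseen word, so no prediction
```

An LLM does the same kind of "what comes next" prediction, but with a learned model that takes the whole preceding context into account rather than only the previous word.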

Core Terminologies and Concepts in LLMs

- A prompt is how the end user “talks” to an LLM to provide instructions and guidance. It is natural-language text describing the intended task.

- A token is a unit of text, such as a word, a sub-word, a character, or a punctuation mark. LLMs do not process words directly; they first break text down into these smaller units. Think of tokens as the basic currency of an LLM: both requests and responses are measured in tokens, and so is the cost of using LLMs through APIs.

- An embedding is a numerical vector representation of data. Think of it as a compact representation that captures the meaning of the data in a reduced number of dimensions, which makes computation efficient. These representations are generated with ML models and support mathematical operations, such as measuring how close two vectors are. Embeddings of textual data can be used to identify chunks of text with similar meanings.

- Fine-tuning is the step of making a model specialize in specific tasks. It involves taking an existing LLM and training it further, typically in a supervised way, on a smaller dataset to adapt it to a specific application or task. Internally, the weights of the model's neural network are adjusted on the new data, either directly or through plug-in adapters attached to the base model. Fine-tuning can be used for custom question answering, custom sentiment analysis, or named entity recognition. The overall goal is to achieve higher-quality results than prompting alone, with the ability to train on more examples than can typically fit in a prompt. Shorter prompts in turn save tokens and hence reduce cost and latency.

- Retrieval-augmented generation (RAG) is an architectural pattern for applying LLMs to custom data in a given domain. It involves chunking the custom data, creating embeddings of the chunks, and storing them in a vector database. The request to the LLM is then vectorized and matched against similar chunks from the database, and the matching chunks are sent to the LLM as part of the prompt for better results.
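The RAG steps described above (chunk, embed, store, match, prompt) can be sketched in a few lines. This is a minimal illustration under strong assumptions: the "embedding" is a plain bag-of-words count vector rather than the output of a real embedding model, the "vector database" is an in-memory list, and the final prompt is only printed, not sent to any LLM. All function names and the sample data are hypothetical.

```python
import math
from collections import Counter


def chunk(text):
    """Split text into sentence-sized chunks (naive split on '.')."""
    return [s.strip() for s in text.split(".") if s.strip()]


def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, index, top_k=1):
    """Return the top_k chunks whose vectors are most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine_similarity(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:top_k]]


def build_prompt(question, context_chunks):
    """Combine retrieved chunks and the question into a single prompt string."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical custom data; the list below plays the role of the vector database.
custom_data = (
    "GraphDB is a graph database that stores RDF data. "
    "Paris is the capital of France. "
    "Tokens are the units of text an LLM processes."
)
index = [(c, embed(c)) for c in chunk(custom_data)]

question = "What is the capital of France"
prompt = build_prompt(question, retrieve(question, index))
print(prompt)
```

In a production setup, `embed` would be replaced by a call to an embedding model and `index` by a vector database, but the flow of data stays the same.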

Limitations of LLMs

In spite of all their power, LLMs suffer from limitations that weaken their effectiveness in many enterprise use cases, especially where accuracy and explainability are paramount.

- Hallucinations: LLMs can generate plausible-sounding but nonsensical or incorrect answers. They are powerful language predictors, not fact machines. They lack a contextual understanding of cause and effect, and there can be a mismatch between their inherent knowledge and the labeled data on which they were trained. This mismatch can be problematic in situations where accuracy is critical, especially for data management applications of LLMs.

- Recency problem: The data on which LLMs have been trained has a cut-off date, which means LLMs know nothing about information beyond that date.

- Lack of lineage: LLMs cannot trace the source and origin of the information that was used to generate a given answer. In regulated industries, this lack of explainability is a major compliance risk.

- Inconsistency of generated text: Different executions of the same prompt, or slightly different prompts, can give different answers, which means that the results are non-deterministic and not reproducible.

- Privacy, trust, and compliance issues: These result from the fact that LLMs are trained on publicly available data, which often contains sensitive or private information.

- Losing track of context: LLMs routinely run into challenges when they lose track of context, especially for a longer piece of text or question.

- Bias in the training data: Most LLMs have been trained on data from the Internet, the quality of which varies and which can be biased. As a result, LLMs fall into the ‘false answer’ trap very easily.

- Susceptibility to prompt injection attacks: Malicious instructions can easily be injected into prompts, and such attacks are difficult to control.
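The inconsistency of generated text noted in the list above largely comes from how the next token is chosen. The sketch below assumes a common setup in which the model assigns a score to each candidate token and the decoder either picks the top one (greedy) or samples from a temperature-scaled probability distribution. The scores and token names are made up for illustration, and real systems have additional sources of variation.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores.values()]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return {token: e / total for token, e in zip(scores, exps)}


def greedy(scores):
    """Always pick the highest-scoring token: the same input gives the same output."""
    return max(scores, key=scores.get)


def sample(scores, temperature, rng):
    """Pick a token at random according to its probability: output can vary per run."""
    probs = softmax(scores, temperature)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


# Hypothetical scores for the token that follows "The capital of France is".
scores = {"Paris": 2.0, "London": 1.5, "Rome": 1.0}

rng = random.Random()  # unseeded, so repeated runs may print different samples
print("greedy: ", greedy(scores))
print("sampled:", [sample(scores, 1.0, rng) for _ in range(5)])
print("P(Paris) at temperature 1.0:", round(softmax(scores, 1.0)["Paris"], 3))
print("P(Paris) at temperature 0.1:", round(softmax(scores, 0.1)["Paris"], 3))
```

Lowering the temperature or fixing a random seed, where the model provider exposes such settings, makes outputs more repeatable, at the cost of less varied text.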

LLM Use Cases

LLMs can be leveraged to build applications like chatbots, coding assistants, and question-answering systems. They can generate innovative ideas, automate tasks, and analyze large amounts of data. LLMs excel at extracting entities from documents, organizing and classifying text data, generating summaries, and extracting key ideas from text. Some of the more widespread uses of LLMs include:

- Inferring sentiments – whether positive, negative, or neutral

- Translating from one language to another

- Generating text to create music, images, and videos

- Drafting articles, creating product descriptions, or generating personalized emails

- Extracting insights from unstructured data (like identifying trends and patterns)

- Summarizing large volumes of textual data

- Orchestrating multiple LLMs as workflows, where the output of one LLM task becomes the input to another, to build end-to-end applications
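The last item in the list, chaining LLM tasks into a workflow, can be sketched as a simple pipeline in which each step consumes the previous step's output. To keep the example self-contained and runnable, the two steps below are deterministic stand-ins rather than real LLM calls; the step names, keyword lists, and sample review are all hypothetical.

```python
from typing import Callable, List

Step = Callable[[str], str]


def summarize(text: str) -> str:
    """Stand-in for an LLM summarization call: keeps only the first sentence."""
    return text.split(".")[0].strip() + "."


def classify_sentiment(text: str) -> str:
    """Stand-in for an LLM sentiment call: naive keyword matching."""
    lowered = text.lower()
    if any(word in lowered for word in ("great", "love", "excellent")):
        return "positive"
    if any(word in lowered for word in ("bad", "terrible", "hate")):
        return "negative"
    return "neutral"


def run_chain(steps: List[Step], initial_input: str) -> str:
    """Feed the output of each step into the next one and return the final result."""
    result = initial_input
    for step in steps:
        result = step(result)
    return result


review = "The new release is great. Setup took a while though."
print(run_chain([summarize, classify_sentiment], review))
```

In a real application each step would wrap a prompt and a model call, and the orchestration layer would also handle errors, retries, and logging.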

How to Use LLMs

When adopting LLMs, organizations can either use proprietary models from OpenAI, Anthropic, and others, or use open-source models. Each option has its pros and cons. Proprietary models offered as a service are easier to start with and can be fine-tuned, but they come with restrictions on usage and modification and can result in vendor lock-in.

Open-source models, on the other hand, can be customized, are typically smaller in size, and can be used for commercial and non-commercial purposes, subject to their licenses. However, they require in-house development effort and upfront investment.

Choosing what type of model to use depends on multiple factors like costs, latency, quality, privacy, and the amount of customization required.

LLMs and knowledge graphs can integrate and complement each other in multiple ways. Here are some of the ways in which Graphwise’s GraphDB can work with LLMs:

- Querying OpenAI GPT Models allows developers to send requests to GPT from a SPARQL query. GraphDB users can send data from the graph to GPT for processing, e.g. classification, entity extraction, or summarization.

- ChatGPT Retrieval Connector allows users to “index” entities or documents from the graph. Technically, a text description is generated for each entity, after which vector embeddings are created and stored in a vector database. One can use this to retrieve similar entities. This connector also enables a straightforward implementation of the RAG pattern with data from GraphDB.

- Talk to Your Graph allows GraphDB users to use natural language from GraphDB Workbench to query the graph using the ChatGPT Retrieval Connector.

Conclusion

Leveraging Generative AI capabilities with LLMs is quickly becoming a key competitive differentiator for enterprises. However, this is a fast-evolving space with continuous technological improvements, and best practices have not yet crystallized.

This is also an unregulated space, and organizations adopting LLMs need to carefully balance their innovative capabilities against the associated risks around data quality, privacy, and trust.

Want to learn how to further enhance your LLMs with Retrieval-Augmented Generation for more accurate, contextual question answering?