The topic of interfacing in natural language with knowledge graphs has gained tremendous popularity. Moreover, according to Gartner, this trend is here to stay and will transform many of the computer system interactions we are used to. The first major step in this direction seems to be natural language querying (NLQ): lately, everyone seems to want to ask natural language questions about their own data.

Out-of-the-box large language model (LLM) chatbots are rarely helpful for question answering in enterprises, because they do not encode the domain-specific, proprietary knowledge about an organization's activities that would make a conversational interface for information extraction valuable. This is where the GraphRAG approach enters the scene as an ideal solution to tailor an LLM to your specific requirements.

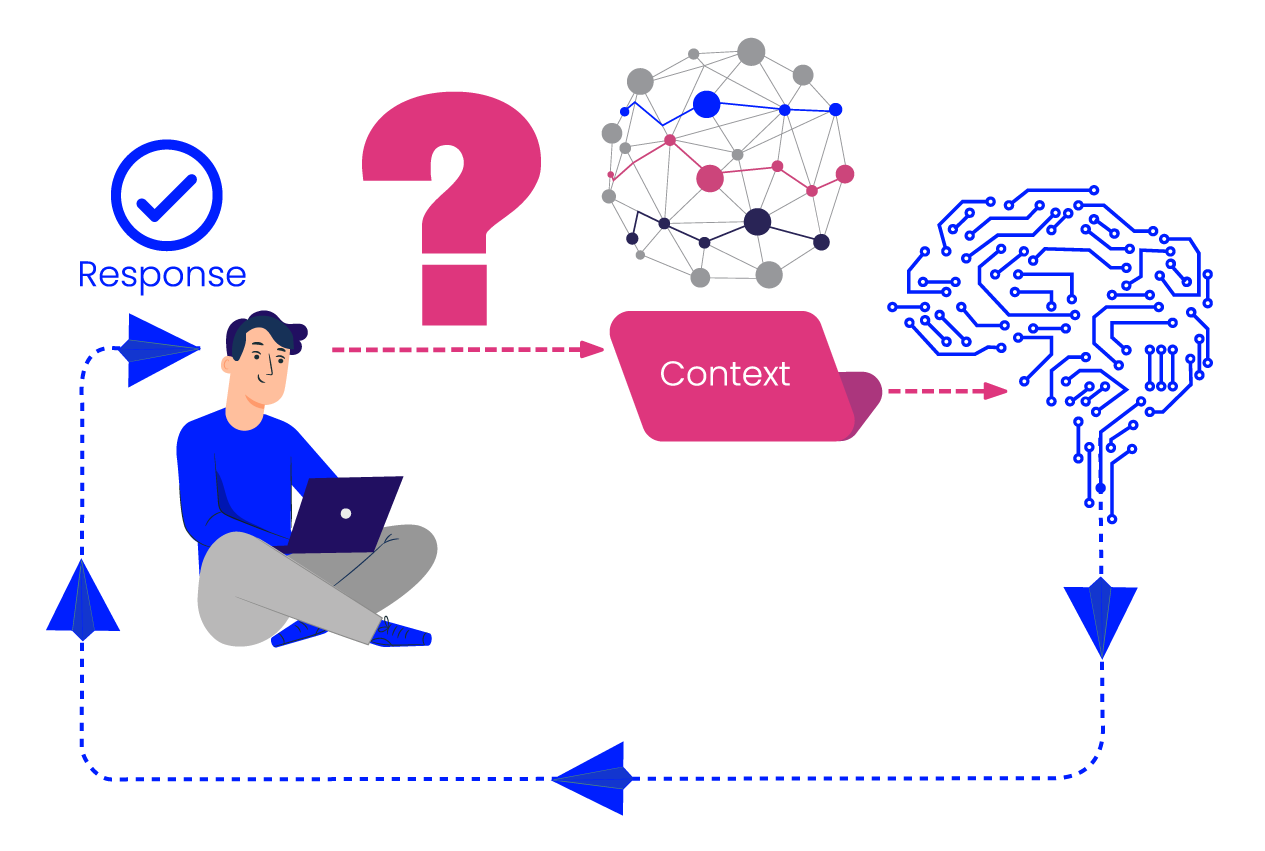

What is RAG?

Retrieval-augmented generation (RAG) retrieves content relevant to a question and sends it to the LLM as context for generating the answer. GraphRAG adds a graph database as a source of that contextual information. Text chunks extracted from larger documents can lack the context, factual correctness and language accuracy the LLM needs to understand them in depth. Instead of sending only plain text chunks, GraphRAG can also give the LLM structured entity information that combines an entity's textual description with its many properties and relationships, which encourages deeper insights from the LLM. With GraphRAG, each record in the vector database can have a contextually rich representation that makes specific terminology easier to understand, so the LLM can make better sense of specific subject domains. GraphRAG can be combined with the standard RAG approach to get the best of both worlds: the structure and accuracy of the graph representation combined with the vastness of textual content.
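To make the idea of "structured entity information" concrete, here is a minimal sketch of how an entity's description, properties and relationships might be flattened into text and combined with ordinary document chunks. The `Entity` class, the function names and the output layout are assumptions made for illustration, not part of any Graphwise product API.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    """A hypothetical, simplified view of one knowledge graph entity."""
    label: str
    description: str
    properties: dict = field(default_factory=dict)
    relationships: list = field(default_factory=list)  # (predicate, target label) pairs


def entity_to_context(entity: Entity) -> str:
    """Flatten an entity into text that can be embedded or sent to an LLM."""
    lines = [f"{entity.label}: {entity.description}"]
    for name, value in entity.properties.items():
        lines.append(f"- {name}: {value}")
    for predicate, target in entity.relationships:
        lines.append(f"- {entity.label} {predicate} {target}")
    return "\n".join(lines)


def build_context(chunks: list, entities: list) -> str:
    """Combine plain text chunks (standard RAG) with entity context (GraphRAG)."""
    parts = ["Document excerpts:"] + list(chunks)
    parts.append("Entity information:")
    parts.extend(entity_to_context(e) for e in entities)
    return "\n\n".join(parts)


graphdb = Entity(
    label="GraphDB",
    description="An RDF graph database.",
    properties={"query language": "SPARQL"},
    relationships=[("is developed by", "Graphwise")],
)
context = build_context(["GraphDB can index content for similarity search."], [graphdb])
print(context)
```

The resulting string would be placed in the prompt ahead of the user's question; how much of each part to include is a tuning decision that depends on the model's context window.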

RAG is the cheapest and most standard way to enhance LLMs with additional knowledge for the purpose of answering a question. In addition, it has been shown to reduce the tendency of LLMs to hallucinate, because the generation adheres more closely to the information in the context, which is generally reliable. For these reasons, RAG has emerged as the most popular way to augment the output of generative models.

[Chart: Cost of Implementation (% of finetuning)]

Besides question answering, RAG can also be used for many other natural language processing tasks, such as information extraction from text, recommendations, sentiment analysis and summarization, to name a few.

How to do RAG?

KNOWLEDGE MODEL: The foundation for a GraphRAG is the taxonomy you use to create a knowledge graph. With PoolParty, you can start building a taxonomy from scratch or use your pre-existing taxonomies. You can also purchase WAND taxonomies or import free, publicly available taxonomies to get started more quickly.

KNOWLEDGE BASE: Since the RAG draws from your own document and data repository, the only thing you need to do is tag the items with semantic concepts using the PoolParty Extractor. Together, the tagged knowledge base and the knowledge model form a knowledge graph, which is then connected to the LLM.

LLM: You decide which LLM to invest in, so that you can feel secure about the model used for this RAG application. There are free LLMs available on the market, more precise paid LLMs, and your own pre-trained LLM if you have one. PoolParty is designed to integrate with the LLM you feel most comfortable with in terms of data security and cost.

The other aspects of the RAG, such as the semantic search and recommender system, all fall into place once these 3 items have been taken care of. Since our architecture relies on a semantic layer, it is easy to build a multi-RAG infrastructure that serves different departments or use cases if that level of specificity is needed.

As simple as the basic implementation is, you need to take into account a list of challenges and considerations to ensure good quality of the results:

- Data quality and relevance are crucial for the effectiveness of GraphRAG, so questions such as how to fetch the most relevant content to send to the LLM, and how much content to send, should be considered.

- Handling dynamic knowledge is usually difficult, as one needs to constantly update the vector index with new data. Depending on the size of the data, this can pose further challenges for the efficiency and scalability of the system.

- Transparency of the generated results is important to make the system trustworthy and usable. There are techniques for prompt engineering that can be used to stimulate the LLM to explain the source of the information included in the answer.
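Two of the considerations above, how much content to send and how to keep answers traceable, can be sketched together. The example below assumes retrieval has already produced `(score, source_id, text)` tuples; the character budget, the score threshold and the prompt wording are illustrative assumptions, not recommended values.

```python
def select_chunks(scored_chunks, max_chars, min_score=0.0):
    """Pick the highest-scoring chunks that fit a character budget.

    scored_chunks: iterable of (score, source_id, text) tuples.
    """
    selected = []
    used = 0
    for score, source_id, text in sorted(scored_chunks, key=lambda c: c[0], reverse=True):
        if score < min_score:
            break  # everything after this point is even less relevant
        if used + len(text) > max_chars:
            continue  # skip chunks that would exceed the budget
        selected.append((source_id, text))
        used += len(text)
    return selected


def build_cited_prompt(question, selected):
    """Number each chunk so the LLM can be asked to cite its sources."""
    numbered = [
        f"[{i}] ({source_id}) {text}"
        for i, (source_id, text) in enumerate(selected, start=1)
    ]
    return (
        "Answer the question using only the numbered sources below. "
        "After each statement, cite the source number in square brackets.\n\n"
        + "\n".join(numbered)
        + f"\n\nQuestion: {question}"
    )


chunks = [(0.9, "doc-a", "aaaa"), (0.2, "doc-b", "bb"), (0.7, "doc-c", "cccccc")]
selected = select_chunks(chunks, max_chars=8, min_score=0.5)
prompt = build_cited_prompt("What is in doc-a?", selected)
print(prompt)
```

A production system would more likely budget by tokens than by characters, and a citation instruction in the prompt encourages, but does not guarantee, that the model references its sources.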

The Different Varieties of GraphRAG

We can distinguish several varieties of GraphRAG, depending on the nature of the questions, the domain and the information in the knowledge graph at hand:

| GraphRAG Varieties | Vanilla Chunky Vector RAG | Type 1: Graph as a Metadata Store | Type 2: Graph as an Expert | Type 3: Talk to your Graph |
|---|---|---|---|---|
| | | Semantic Metadata (PREMIUM) | Domain Knowledge (ENTERPRISE) | Factual Data (ENTERPRISE) |
| Type of Graph Needed | | Content Hub | Domain Knowledge Model | Data Fabric (ENTERPRISE) |
| The Result | Extract and summarize information from a few relevant document chunks, loss of original structure | Precise filtering based on metadata. Explainability via references to the relevant documents and concepts | Get richer results! Domain knowledge helps retrieval of relevant documents that a vanilla vector DB would miss | Extract relevant factual information. Get accuracy and analytical depth from structured queries via chat |

Besides standard RAG, which is good for minor knowledge extraction and summarisation, we have the following graph-enriched RAG models:

- Graph as a Content Hub: Extract relevant chunks of documents and ask the LLM to answer using them. This variety requires a KG containing relevant textual content and metadata about it as well as integration with a vector database.

- Graph as a Subject Matter Expert: Extract descriptions of concepts and entities relevant to the natural language (NL) question and pass those to the LLM as additional “semantic context”. The description should ideally include relationships between the concepts. This variety requires a KG with a comprehensive conceptual model, including relevant ontologies, taxonomies or other entity descriptions. The implementation requires entity linking or another mechanism for identifying the concepts relevant to the question.

- Graph as a Database: Map (part of) the NL question to a graph query, execute the query and ask the LLM to summarize the results. This variety requires a graph that holds relevant factual information. The implementation of such a pattern requires some sort of NL-to-Graph-query tool and entity linking.
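As an illustration of the "Subject Matter Expert" variety, the sketch below links concepts in a question by simple label matching and turns them into "semantic context" for the prompt. The toy taxonomy, its definitions and the function names are invented for this example; real entity linking (for instance with a dedicated extractor) is considerably more robust than whole-word matching.

```python
import re

# Toy taxonomy, invented for this example: concept id -> labels, definition, broader concept.
TAXONOMY = {
    "esg": {
        "labels": ["ESG", "environmental, social and governance"],
        "definition": "Criteria for assessing an organization's environmental, "
                      "social and governance practices.",
        "broader": None,
    },
    "carbon-footprint": {
        "labels": ["carbon footprint"],
        "definition": "Total greenhouse gas emissions caused by an activity or organization.",
        "broader": "esg",
    },
}


def link_concepts(question: str, taxonomy: dict) -> list:
    """Return ids of concepts whose labels occur in the question (whole word, case-insensitive)."""
    lowered = question.lower()
    linked = []
    for concept_id, concept in taxonomy.items():
        for label in concept["labels"]:
            if re.search(r"\b" + re.escape(label.lower()) + r"\b", lowered):
                linked.append(concept_id)
                break  # one matching label is enough for this concept
    return linked


def semantic_context(concept_ids: list, taxonomy: dict) -> str:
    """Describe each linked concept, including its broader concept if it has one."""
    lines = []
    for concept_id in concept_ids:
        concept = taxonomy[concept_id]
        line = f"{concept['labels'][0]}: {concept['definition']}"
        if concept["broader"]:
            broader_label = taxonomy[concept["broader"]]["labels"][0]
            line += f" Broader concept: {broader_label}."
        lines.append(line)
    return "\n".join(lines)


question = "How do we report our carbon footprint?"
linked = link_concepts(question, TAXONOMY)
prompt = f"Semantic context:\n{semantic_context(linked, TAXONOMY)}\n\nQuestion: {question}"
print(prompt)
```

The same linked concepts could also be used to filter or expand the document retrieval step, which is how domain knowledge can surface documents that a purely vector-based search would miss.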

How Graphwise makes GraphRAG easier

Graphwise GraphDB provides numerous integrations which enable users to create their own GraphRAG implementation quickly and efficiently. GraphDB’s Similarity plugin is an easy tool to create an embedding index of your content for free and use SPARQL to query this index for the top K entities or pieces of content closest to the user question.
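Conceptually, a "top K closest to the question" lookup ranks indexed items by the similarity of their embeddings to the question's embedding. The following is a generic, in-memory sketch of that idea using cosine similarity; it is not how the Similarity plugin is implemented, and the tiny two-dimensional vectors are placeholders for real embeddings.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, index, k):
    """Return the k (key, score) pairs from the index most similar to the query."""
    scored = [(cosine(query_vec, vec), key) for key, vec in index.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(key, score) for score, key in scored[:k]]


# Placeholder embeddings; in practice these come from an embedding model.
index = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
results = top_k([1.0, 0.1], index, k=2)
print([key for key, _ in results])
```

An exhaustive scan like this is only practical for small indexes; dedicated vector indexes use approximate nearest-neighbour search to stay fast at scale.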

For more complex use cases, which require higher precision of the results, GraphDB also provides the ChatGPT Retrieval Plugin Connector through which you can index your content in a vector database using a state-of-the-art embeddings generation model and run powerful queries against this vector database. Moreover, the plugin takes care to continuously sync the state of the knowledge in GraphDB with the vector database in a transactionally-safe manner, which means new data will be immediately available for an LLM integration.

The ChatGPT Retrieval Plugin Connector, like other GraphDB connectors, allows you to precisely configure what data you want to pull from your knowledge graph and store as embeddings in an external vector database. It is not limited to textual fields: it can also convert structured data about RDF entities into text embeddings. The connection to the vector database is managed by the ChatGPT Retrieval Plugin, hence the name of the connector. This is an open-source tool, developed and maintained by OpenAI under an MIT license. Its function is to index and query content. To index content, the plugin receives a piece of text, splits it into manageable parts or “chunks”, creates vector embeddings for each chunk using OpenAI’s embedding model, and stores these embeddings in a vector database. The plugin is compatible with numerous databases, including some of the most popular ones of the last few years, such as Weaviate, Pinecone and Elasticsearch.
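The indexing steps described above (split, embed, store) can be sketched in a few lines. This is a simplified illustration, not the plugin's actual chunking algorithm: it splits on words with a fixed overlap, and the `embed` argument is a stand-in for a call to a real embedding model. All names and default sizes are assumptions.

```python
def chunk_words(text, max_words=50, overlap=10):
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks = []
    step = max_words - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def index_document(doc_id, text, embed, store, **chunk_options):
    """Chunk a document, embed each chunk and store it under '<doc_id>#<n>'."""
    for n, chunk in enumerate(chunk_words(text, **chunk_options)):
        store[f"{doc_id}#{n}"] = {"text": chunk, "embedding": embed(chunk)}
    return store


def toy_embed(chunk):
    """Stand-in for an embedding model: a one-dimensional 'vector' of the chunk length."""
    return [float(len(chunk))]


text = " ".join(f"w{i}" for i in range(10))
store = index_document("doc-1", text, toy_embed, {}, max_words=4, overlap=1)
print(list(store))
```

The overlap keeps sentences that straddle a chunk boundary retrievable from either side, at the cost of storing some text twice.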

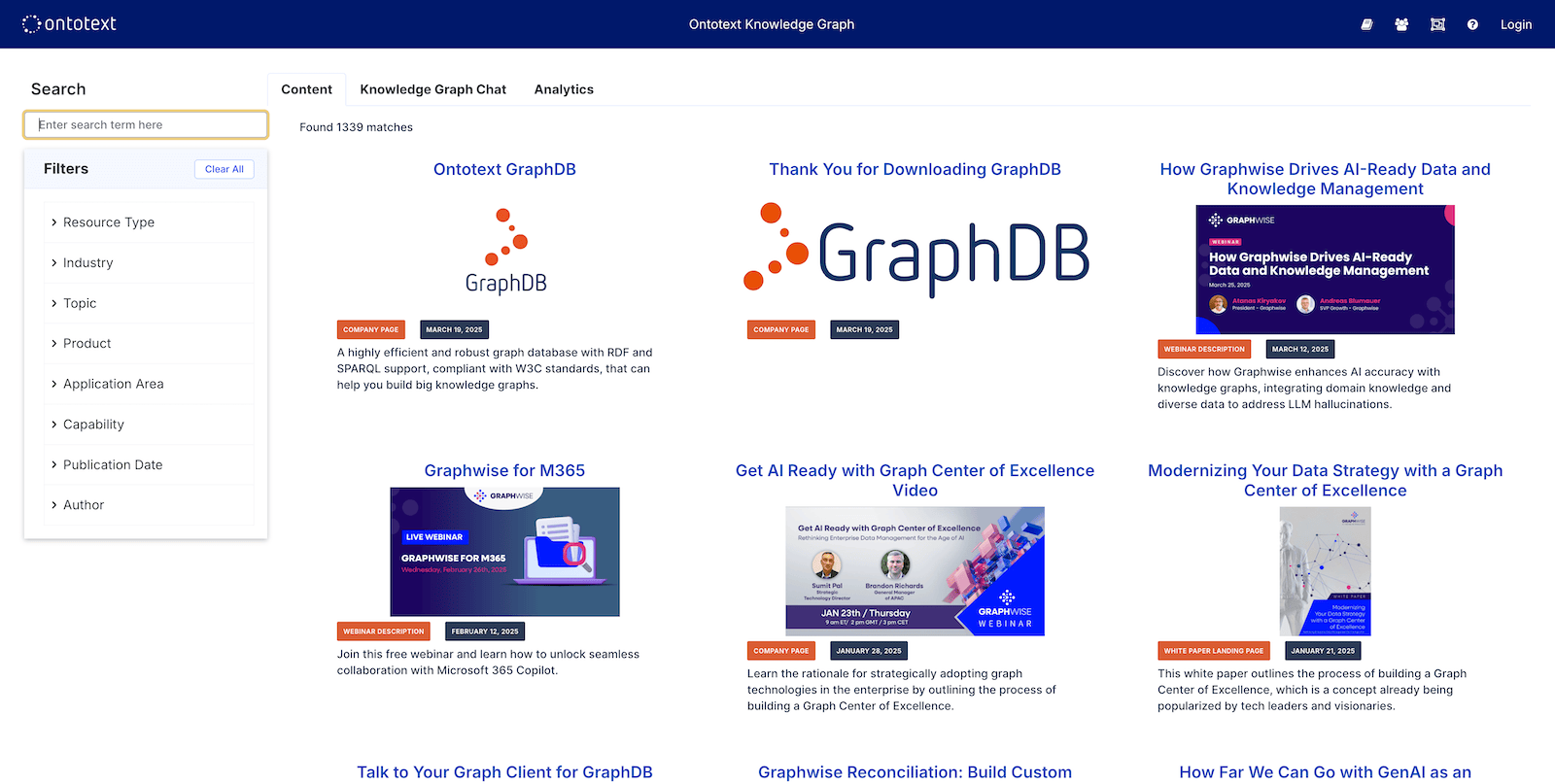

Online Demos powered by Graphwise

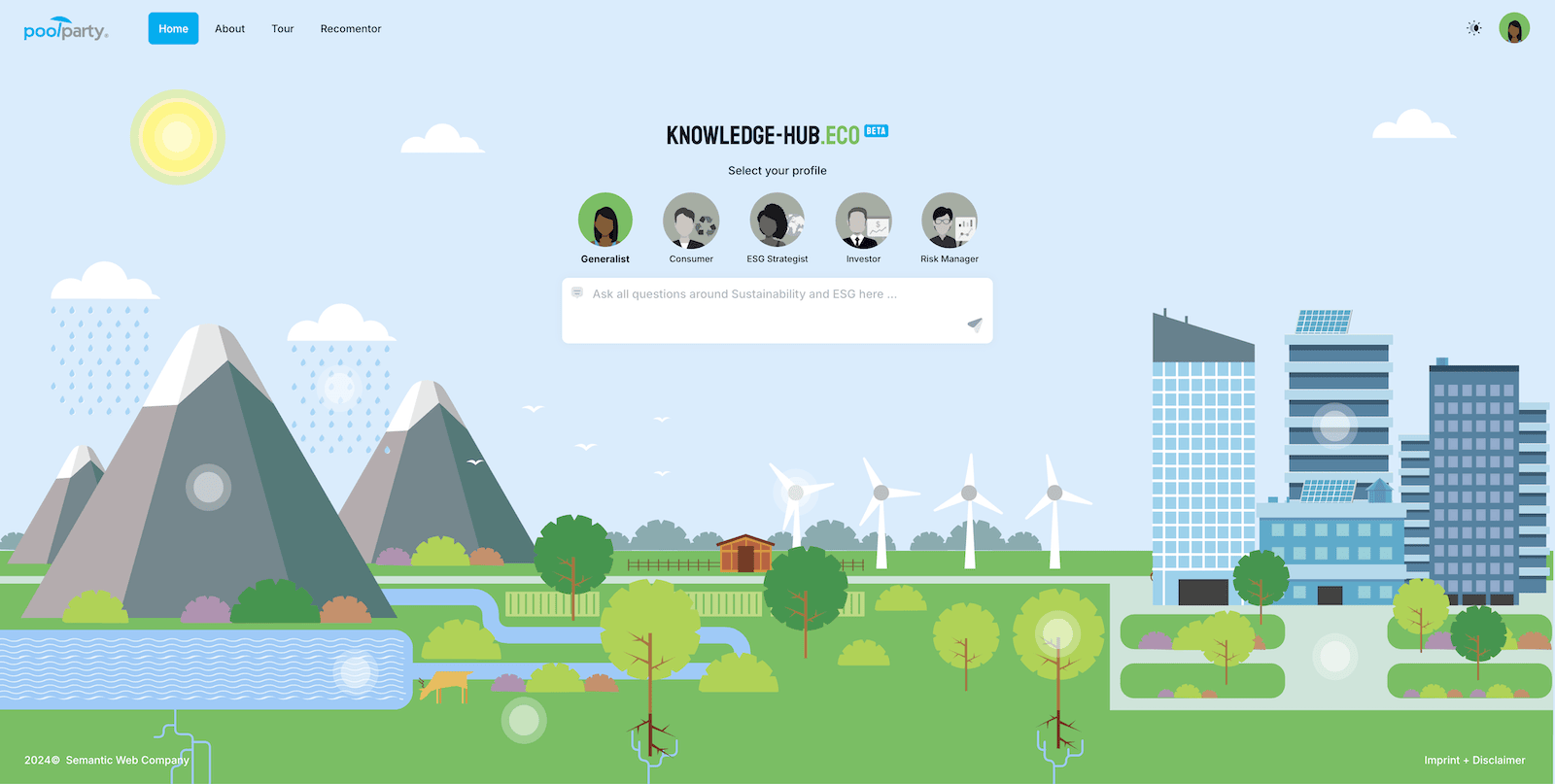

Knowledge Hub

Gather knowledge on sustainability and ESG issues. GraphRAG provides accurate and personalised answers and recommendations.

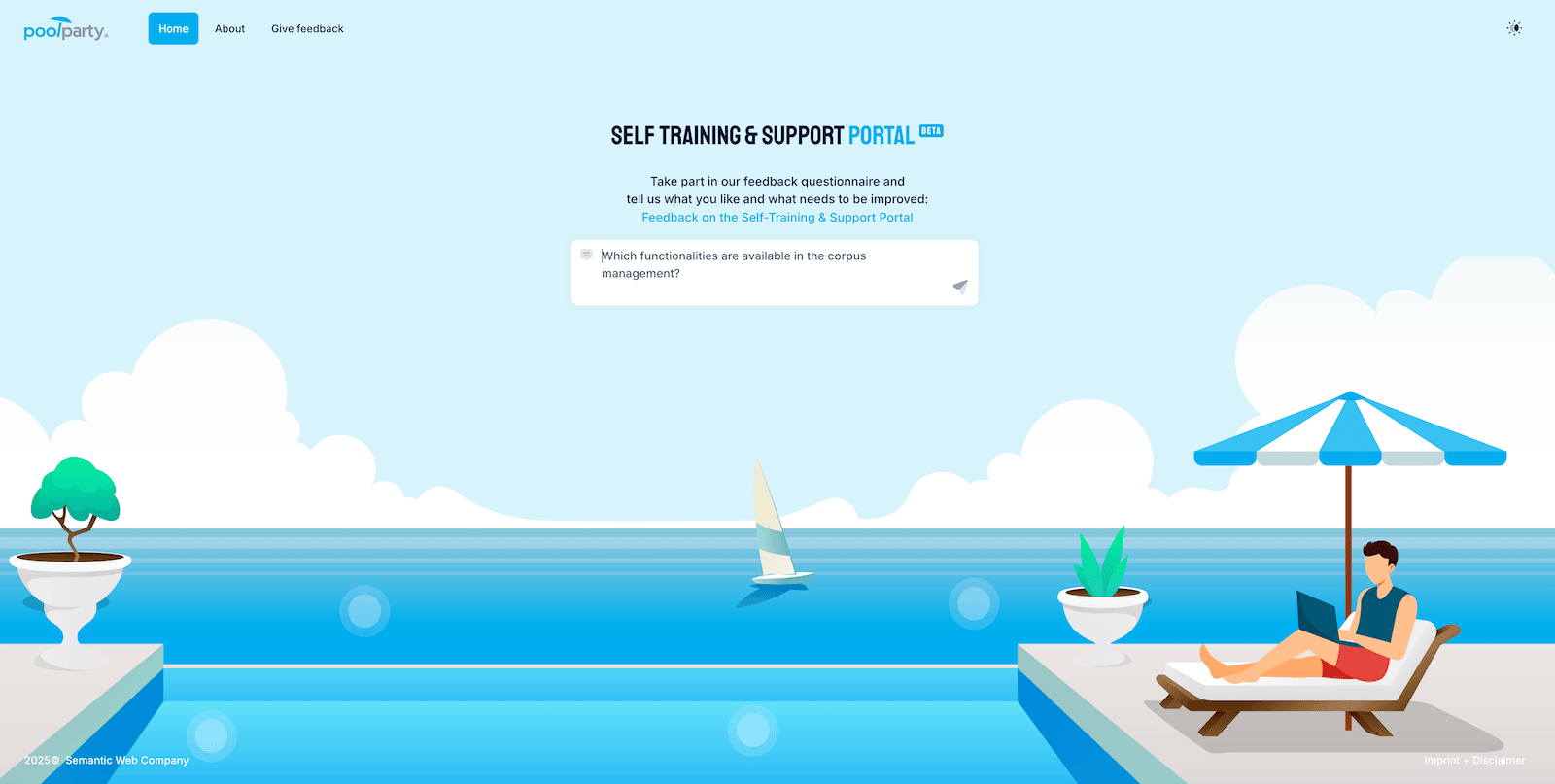

Intelligent Support Desk

Learn about a software suite, find answers quickly and test your knowledge of a software platform. Benefit from intelligent wizards based on GraphRAG.

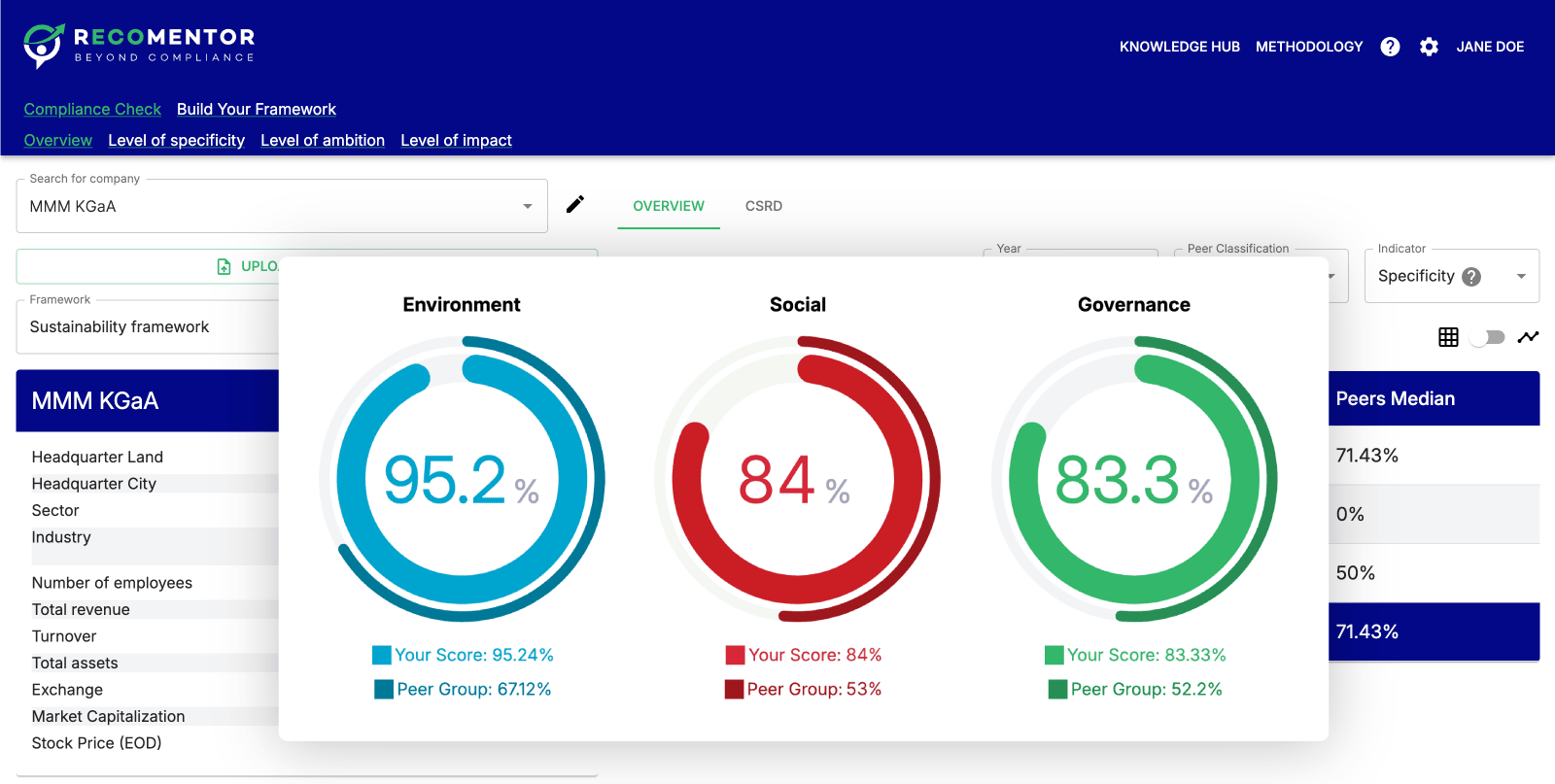

Compliance Checks

Meet sustainability criteria and align with regulatory requirements. Benefit from automatic compliance checks and comparisons based on GraphRAG.

Connected Content

Use a knowledge graph and GraphRAG to find marketing materials and ask questions about them. Benefit from efficient search and accurate results.

GraphDB interacts with the plugin’s REST interface, which decouples it from the embeddings model and the vector database. Graphwise has also developed an alternative to OpenAI’s solution, which allows a custom embeddings model to be plugged in, including Llama and Hugging Face models. The ChatGPT Retrieval Plugin also provides a REST interface for querying the vector database with a query text, which is available in GraphDB through SPARQL. If you prefer not to use a formal query language such as SPARQL for this, GraphDB offers an out-of-the-box interface for conversing with your own RDF data on top of a ChatGPT Retrieval Plugin Connector, called Talk to Your Graph. Follow the GraphDB documentation of Talk to Your Graph for instructions on how to set it up.
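For readers who want to call the plugin's REST interface directly rather than through GraphDB, the sketch below builds a request for the plugin's `/query` endpoint, based on the request shape described in the open-source plugin's README (a list of queries, each with a query text and an optional `top_k`, authenticated with a bearer token). The base URL and token are placeholders, and the exact schema should be verified against the plugin version you deploy.

```python
import json
import urllib.request


def build_query_request(base_url: str, bearer_token: str, question: str, top_k: int = 3):
    """Build (but do not send) a POST request for the plugin's /query endpoint."""
    payload = {"queries": [{"query": question, "top_k": top_k}]}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/query",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {bearer_token}",
        },
        method="POST",
    )


# Placeholder URL and token; replace them with your own deployment's values.
request = build_query_request("http://localhost:8000", "example-token", "What is GraphRAG?")
print(request.full_url)

# Sending the request requires a running plugin instance:
# with urllib.request.urlopen(request) as response:
#     results = json.loads(response.read())["results"]
```

Building the request separately from sending it keeps the example testable without a live service.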

Conclusion

The GraphRAG approach represents a significant advancement in the enrichment of LLMs. By combining the strengths of retrieval-based and generative approaches, GraphRAG enhances the ability of LLMs to produce more accurate, relevant, and contextually informed responses. This technique not only improves the overall quality of outputs but also expands the capabilities of LLMs in handling complex and nuanced queries. As a result, GraphRAG opens up new possibilities in various applications, from advanced chatbots to sophisticated data analysis tools, making it a pivotal development in the field of natural language processing.