The hype around large language models (LLMs) and generative AI (GenAI) has reached a peak, with AI washing everywhere. This raises serious questions about whether organizations are chasing fool’s gold, expecting to solve complex problems overnight. Most enterprises fail to realize the extensive work required to deploy GenAI into production. Business leaders are realizing that they need higher success rates rather than more AI initiatives, with a focus on use cases, domain context, and ROI instead of chasing the latest models.

The AI implementation gap

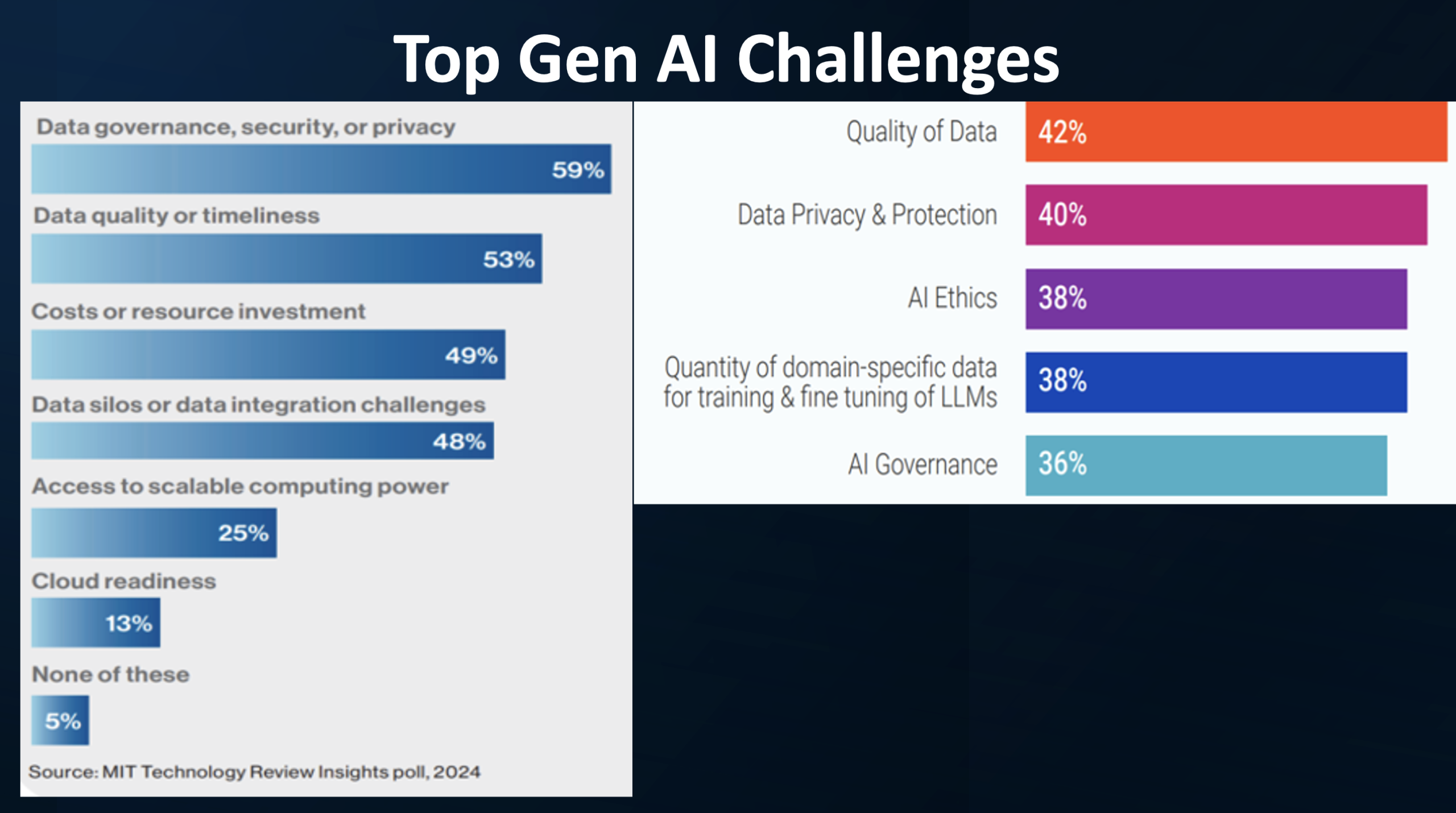

Many prospects describe a pressing need for LLM integration but are unclear about their business goals, which suggests requests driven by excitement rather than actual business needs. Surveys of the top GenAI challenges confirm this.

Credit: CDO Trends

In the chase to realize their AI dreams, most organizations struggle to semantically integrate data across data and metadata silos, and they lack high-quality data backed by proper processes. Years of neglecting fundamental practices have created this massive data debt.

Data findability is a major challenge, with research showing that employees spend 30% of their time searching for relevant data. Enterprises have created a tangled mess of data copies and integrations that costs 40-60% of their annual technology budget (what we call the “bad data tax”). Yet this massive spending rarely translates to key insights or better business decisions.

What is ailing data management?

The missing piece in most data management strategies is the semantic layer. Data without context is useless and often a liability. This makes semantics, not technology, the core component for success in GenAI initiatives. Organizations need to treat business context and semantics as priorities rather than procrastinating and thinking of the semantic layer as “semantic later”. Some of Graphwise’s global customers have eventually recognized the critical need for semantics to make their data management strategies effective and efficient, after unsuccessfully trying multiple mainstream solutions.

The shift from being data-driven or AI-driven to becoming knowledge-driven requires organizations to build context around data, content, users, and processes. This is best done by collecting metadata signals throughout dataflows, user journeys, and workflows. Additionally, operational data needs to be semantically enriched with domain- and industry-specific ontologies and taxonomies.

Successful GenAI implementations are fueled by the necessary domain knowledge and context, and knowledge graphs provide the ideal platform to connect disparate data across systems semantically. This boosts GenAI accuracy and relevance with metadata, context, and semantics. RDF-based knowledge graphs offer tools to describe context, entities, relationships, and hierarchies, enabling reasoning and the inference of new facts from existing data.
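As a rough illustration of what inferring new facts from existing data can look like, the sketch below stores facts as subject-predicate-object triples in plain Python and applies a single RDFS-style rule: if an entity has a type, it also has every superclass of that type. The `ex:` identifiers and the `infer_types` helper are hypothetical names chosen for this example, and the code is a toy stand-in, not an RDF store or a standards-compliant reasoner.

```python
# Toy triple store: each fact is a (subject, predicate, object) tuple.
# All identifiers below are hypothetical, chosen only for illustration.
TRIPLES = {
    ("ex:SavingsAccount", "rdfs:subClassOf", "ex:Account"),
    ("ex:Account", "rdfs:subClassOf", "ex:FinancialProduct"),
    ("ex:acct-001", "rdf:type", "ex:SavingsAccount"),
}


def infer_types(triples):
    """Apply one RDFS-style rule until no new facts appear:
    if X has type C and C is a subclass of D, then X has type D."""
    facts = set(triples)
    changed = True
    while changed:
        changed = False
        # Snapshot the current subclass and type facts before adding new ones.
        subclass_of = {(s, o) for s, p, o in facts if p == "rdfs:subClassOf"}
        typed = {(s, o) for s, p, o in facts if p == "rdf:type"}
        for entity, cls in typed:
            for sub, sup in subclass_of:
                new_fact = (entity, "rdf:type", sup)
                if sub == cls and new_fact not in facts:
                    facts.add(new_fact)
                    changed = True
    return facts


# Facts that were never stated explicitly but follow from the hierarchy.
inferred = infer_types(TRIPLES) - TRIPLES
for fact in sorted(inferred):
    print(fact)
```

Here the only stated type of `ex:acct-001` is `ex:SavingsAccount`; the other two types are derived from the class hierarchy rather than stored, which is the kind of context a downstream GenAI application could draw on.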

A semantic layer built with knowledge graphs

Organizations have always struggled with siloed applications lacking standardization, security, and consistent schemas. This necessitates a shift from an application-centric to a data-centric approach. Knowledge graphs provide the foundation for data quality, interoperability, and reusability by storing data once in a machine-readable format. This lets enterprises retain the original context and semantics for building downstream data products, rather than repeatedly transforming data for each application.

There are three ways in which a semantic layer built with knowledge graphs increases the inherent value of data:

- Enables faster data integration by mapping data to shared semantic models

- Improves data quality by capturing contextual relationships through ontologies, vocabularies, taxonomies, and inferred relationships

- Builds trust with shared models that are backed by validated and consistent data, entity resolution, data lineage, and provenance

A Graph Center of Excellence

Knowledge graphs have been an industry disruptor and a key driver of innovation across many enterprises. A Graph Center of Excellence (Graph CoE) is a strategic initiative that addresses technology fragmentation by semantically connecting siloed data across applications with shared meaning, serving as a hub for data management processes within an organization.

A Graph CoE identifies and prioritizes key use cases, pain points, target ROI, and business outcomes. It requires C-suite sponsorship to establish graph technology as a strategic initiative. Most importantly, it builds a foundational semantic layer to ensure data reusability, shareability, and trustworthiness across the organization. It also requires skilled data and knowledge engineers to build and deploy use cases, leveraging semantics with knowledge graphs.

Key Graph CoE elements include defining a strategy for leveraging knowledge graphs across the organization, outlining underlying business drivers like cost containment, process automation, regulatory compliance, and governance. It also involves establishing data policies and standards to ensure the semantic layer is built with engineering principles that prioritize interoperability and reusability.

From ETL to ECL

Data processing is shifting from integrating structured data to handling unstructured data, driven by AI. Unstructured data requires semantics and context for machines to understand connections. As unstructured data rapidly grows, organizations need to adopt the new paradigm of ECL (extract, contextualize, load) instead of traditional ETL (extract, transform, load). ECL facilitates semantic ingestion and management of connected data across processes and workflows. Without semantically integrating data, organizations stand to lose reusability and shared meaning, and will end up spending significant resources resolving ambiguities across inconsistent data.
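One way to picture the difference is a minimal ECL sketch in plain Python: two silos describe the same customer with different field names, the contextualize step maps those fields to shared vocabulary terms instead of reshaping them for a single application, and the load step adds the result to one graph. The source names, field names, `ex:` terms, and the assumption that both systems share the same customer `id` are all hypothetical and exist only for illustration.

```python
# Shared vocabulary: maps each source's local field names to common terms.
# Source names, field names, and the "ex:" terms are all hypothetical.
FIELD_MAP = {
    "crm": {"cust_nm": "ex:name", "eml": "ex:email"},
    "billing": {"customer_name": "ex:name", "contact_email": "ex:email"},
}


def extract():
    """Extract: return raw records as they appear in each silo."""
    return [
        ("crm", {"id": "42", "cust_nm": "Ada Lovelace",
                 "eml": "ada@example.com"}),
        ("billing", {"id": "42", "customer_name": "Ada Lovelace",
                     "contact_email": "ada@example.com"}),
    ]


def contextualize(source, record):
    """Contextualize: keep the values, but attach shared meaning to them."""
    # Assumption: both silos use the same customer id, so one URI-like
    # identifier can stand for the same real-world customer.
    subject = f"ex:customer/{record['id']}"
    triples = [(subject, "rdf:type", "ex:Customer")]
    for field, value in record.items():
        term = FIELD_MAP[source].get(field)
        if term is not None:  # fields with no shared term are skipped here
            triples.append((subject, term, value))
    return triples


def load(graph, triples):
    """Load: add triples to the graph; a set ignores repeated facts."""
    graph.update(triples)


graph = set()
for source, record in extract():
    load(graph, contextualize(source, record))

for triple in sorted(graph):
    print(triple)
```

Because both records resolve to the same identifier and the same shared terms, the graph ends up with one description of the customer rather than two incompatible copies.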

Adopting an ECL-based approach to data processing opens new frontiers for knowledge discovery and interaction, enabling real-time contextual optimizations and personalized data conversations. By conversing with data that is semantically enriched, organizations enhance decision-making and innovation. Knowledge models with contextual information improve user intent interpretation and response accuracy. They enhance retrieval of relevant information, optimize queries for semantic matching, and incorporate expert rules for effective chunking. The result is responses that are contextually accurate and tailored to each user's role and task.

Solving data quality at the source

Data quality, often overlooked, is another major obstacle for organizations, contributing significantly to data debt. It’s more cost-effective to ensure data quality before feeding data into AI systems, because low-quality data leads to poor outcomes and creates a vicious cycle. High-quality, trusted data is crucial for data-driven enterprises, especially since unstructured data often contains contradictions and inconsistencies. This is evident in chatbots that provide factually wrong, incoherent, or out-of-context responses during extended conversations.

RDF-based knowledge graphs offer significant data quality advantages. One can declaratively specify data quality rules using SHACL, enabling not only basic data validation but also conceptual and semantic validation. This ensures data consistency that supports reasoning, and prevents duplicates through mechanisms like URIs.
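To give a feel for what declarative data quality rules mean in practice, the sketch below expresses constraints as data and checks a small graph against them. It is loosely modeled on the cardinality idea behind SHACL's `sh:minCount` and `sh:maxCount`, but it is plain Python, not SHACL syntax and not a SHACL engine; the shape layout, the `validate` helper, and the `ex:` identifiers are assumptions made for this example.

```python
# Declarative "shape": constraints are data, not code. Loosely modeled on
# SHACL's min/max cardinality idea; NOT SHACL syntax or a SHACL engine.
CUSTOMER_SHAPE = {
    "target_class": "ex:Customer",
    "properties": {
        "ex:email": {"min_count": 1, "max_count": 1},
        "ex:name": {"min_count": 1},
    },
}

# Hypothetical data: each customer is identified once by a URI-like key.
GRAPH = {
    ("ex:customer/1", "rdf:type", "ex:Customer"),
    ("ex:customer/1", "ex:name", "Ada Lovelace"),
    ("ex:customer/1", "ex:email", "ada@example.com"),
    ("ex:customer/2", "rdf:type", "ex:Customer"),
    ("ex:customer/2", "ex:name", "Alan Turing"),  # no email: should fail
}


def validate(graph, shape):
    """Return a sorted list of (node, property, message) violations."""
    violations = []
    # Only nodes of the shape's target class are checked.
    targets = {s for s, p, o in graph
               if p == "rdf:type" and o == shape["target_class"]}
    for node in targets:
        for prop, rule in shape["properties"].items():
            count = sum(1 for s, p, _ in graph if s == node and p == prop)
            if count < rule.get("min_count", 0):
                violations.append((node, prop, "too few values"))
            if count > rule.get("max_count", float("inf")):
                violations.append((node, prop, "too many values"))
    return sorted(violations)


report = validate(GRAPH, CUSTOMER_SHAPE)
for violation in report:
    print(violation)
```

Keeping the rules in a data structure separate from the checking logic is the point of the sketch: the constraints can be reviewed, versioned, and extended without touching the validator.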

Going beyond algorithms

AI profoundly impacts all organizational roles, making AI literacy essential. Everyone needs to understand data’s source, flow, trustworthiness, and underlying rules and policies. AI literacy extends beyond basic data literacy. It requires understanding AI’s influence when consuming data (for example, detecting AI-generated content) and effectively leveraging AI algorithms when creating data. Without data literacy, AI yields no ROI. As AI transforms the workplace, AI literacy and knowledge graphs will become crucial.

Deep learning doesn’t provide human-understandable insights into how a specific result was achieved. Explainability is a crucial factor, especially in the legal and finance domains. Knowledge graphs capture entities, attributes, and relationships with associated semantics and context, thereby enhancing data interpretability and explainability. This makes contextual AI increasingly possible, as demonstrated by systems using AI and knowledge graphs that can show the provenance of answers.

To wrap it up

To thrive in the age of AI, organizations need a shift to connected, contextualized data that is easily integrated, high-quality, trustworthy, and traceable. AI greatly benefits from semantically enriched data with ontologies and taxonomies. It is imperative for organizations to invest in semantics via knowledge graphs to reap the promised benefits of GenAI.

Research indicates knowledge graphs improve LLM accuracy. With data-related costs consuming 40-60% of IT budgets (the bad data tax), a context-driven, knowledge-driven approach to data management, built on a foundational semantic layer and supported by a Graph CoE, presents a compelling business case.