This article shares insights from Enterprise Knowledge’s collaborative experience with GraphDB and its Talk to Your Graph interface, provided by Graphwise.

LLM-powered applications unlock untapped potential in leveraging unstructured data for business innovation, thanks to their natural language fluency and cross-domain versatility. When deployed effectively, these models can accelerate the discovery of critical insights, automate complex information retrieval processes, and perform both analytical and synthetic operations on diverse datasets tailored to specific business needs.

One major challenge in leveraging LLMs for enterprise applications is the difficulty of dynamically editing and maintaining knowledge-based models. Unlike traditional rule-based systems, LLMs do not natively support structured updates or explicit reasoning over evolving knowledge. This makes it difficult to correct inaccuracies, refine domain-specific understanding, or incorporate new information without retraining or complex prompting strategies.

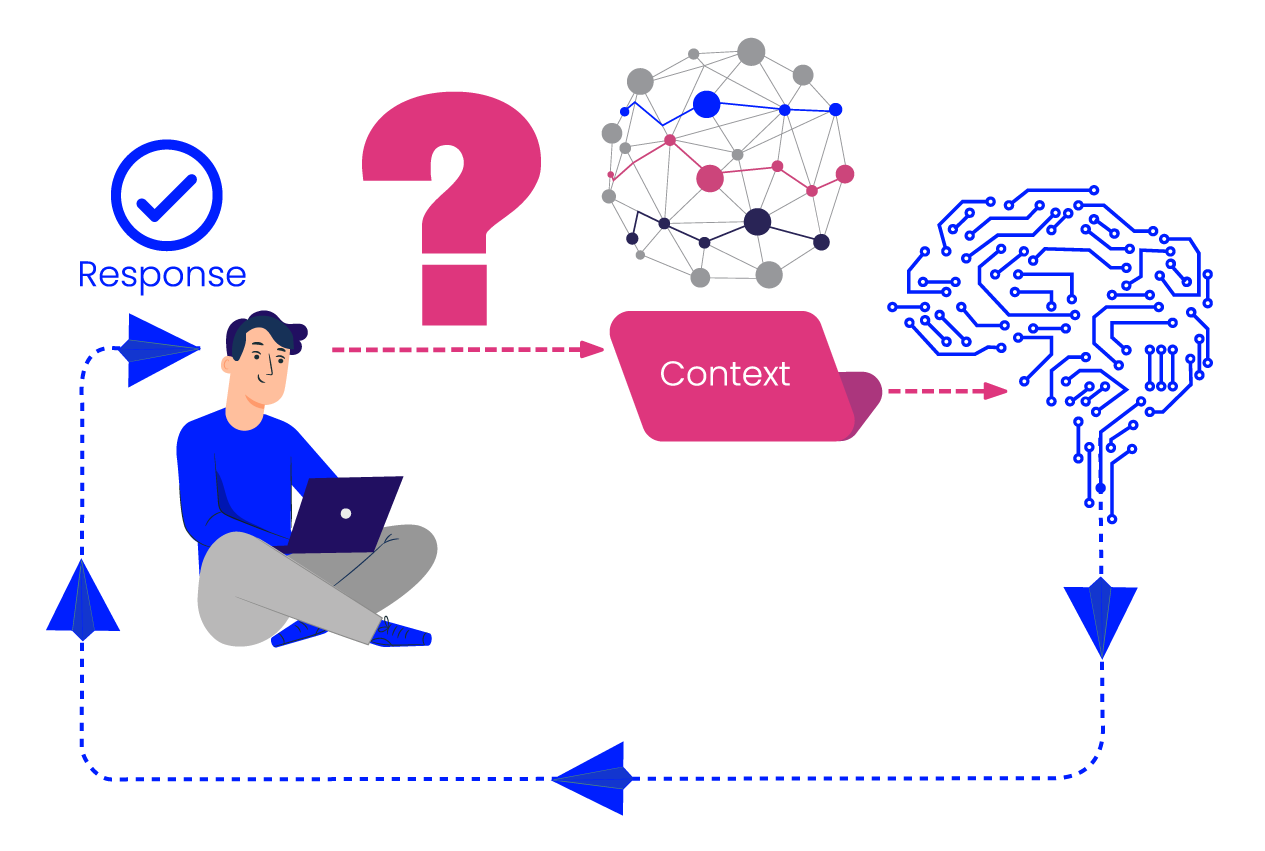

When an LLM is called to perform a task, it has no inherent awareness of why it has been invoked or the context in which it operates. To address this challenge, various methods have been developed, including prompt engineering, vector retrieval-augmented generation (Vector RAG), and graph-based retrieval-augmented generation (Graph RAG). RAG, or retrieval-augmented generation, is an approach that enhances LLM responses by incorporating external knowledge sources at query time, allowing the model to retrieve and ground its outputs in relevant, up-to-date information.
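To make the retrieve-then-ground idea concrete, here is a minimal sketch in Python. It is not taken from any particular product: the document list, the word-overlap scoring, and the prompt wording are all illustrative assumptions, and a production system would use a proper index and an actual LLM call in place of the final string.

```python
import re
from string import Template
from typing import List, Set

# Hypothetical in-memory knowledge source; a real system would query an index or database.
DOCUMENTS = [
    "GraphDB is an RDF graph database.",
    "SPARQL is the query language for RDF data.",
    "Vector search ranks text chunks by embedding similarity.",
]

# A fixed prompt template keeps the input structure consistent across calls.
PROMPT = Template(
    "Answer using only the context below.\n\n"
    "Context:\n$context\n\n"
    "Question: $question"
)


def tokens(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, k: int = 2) -> List[str]:
    """Rank documents by how many words they share with the question (toy scoring)."""
    q = tokens(question)
    ranked = sorted(DOCUMENTS, key=lambda d: len(q & tokens(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str) -> str:
    """Retrieve context at query time and place it in the prompt sent to the LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question))
    return PROMPT.substitute(context=context, question=question)


if __name__ == "__main__":
    print(build_prompt("What is the query language for RDF data?"))
```

The point of the sketch is the ordering: retrieval happens first, and the model only ever sees the question together with the retrieved context.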

Strengths and limitations of different approaches

Each method has a set of strengths and weaknesses that organizations must consider when developing their AI strategy.

Prompt Engineering

- Strengths: Provides precise control over the information presented to the LLM, maintains a consistent input structure through prompt templating, and is easy to implement and refine.

- Weaknesses: Lacks generalizability across different use cases, often requires trial and error to optimize prompts, and does not inherently solve the problem of retrieving relevant data.

Vector RAG

- Strengths: Scales efficiently for large document collections, excels at retrieving semantically relevant content, and is straightforward to implement.

- Weaknesses: Struggles to capture meaningful relationships between entities or structural elements of queries, and relies on model tuning for optimal performance. It can be sensitive to text volume, performing poorly when processing too much or too little content at once. It also complicates change management, since updates to domain knowledge or data sources require frequent re-indexing and retraining to maintain relevance.

Graph RAG

- Strengths: Leverages structured relationships to enhance query relevance, embeds semantic context into responses through graph-based models, and supports diverse query types, including retrieval and aggregation. By incorporating a graph-based knowledge model, it captures and applies domain expertise, enabling more precise, contextually aware responses that reflect real-world relationships and hierarchies.

- Weaknesses: Requires graph infrastructure. Adopting it is a strategic decision that entails building both the platform itself and the skills to maintain it.

Each of these methods compensates for the limitations of the others. Vector RAG excels at retrieving the most relevant direct text for LLMs but lacks a broader domain perspective. Graph RAG structures information according to domain-specific relationships, enabling more contextually aware responses. Prompt engineering provides explicit instructions to LLMs, guiding them in understanding and executing tasks effectively.

By integrating these approaches, organizations can build a more flexible and powerful system that enables LLMs to process unstructured information with greater clarity, accuracy, and contextual awareness.

A Novel Solution: Graphwise’s Talk To Your Graph Tool

To address the limitations of these approaches, Graphwise has developed Talk To Your Graph (TTYG), a retrieval and synthesis framework that provides ready-made access to a GraphRAG interface over your data. TTYG lets an LLM retrieve information from GraphDB and enrich its responses with semantically structured information. By integrating GraphDB with OpenAI’s Assistants API, TTYG decreases the time and effort required to start using GraphRAG, enabling businesses to quickly realize the value of semantically assisted information retrieval in AI use cases.

The process begins with a message thread. A single TTYG instance can have multiple message threads, and each thread accumulates its own context, so the responses the LLM gives can change as more information is processed within a thread. When a message is sent, the LLM determines whether to respond directly to the question or retrieve information from the graph. If information needs to be retrieved, the LLM writes the relevant SPARQL query, and the query is run against the graph. The results are then passed to a further LLM call, which takes them into account when answering your question.
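The message flow described above can be sketched as a small tool-use loop. This is an illustration under stated assumptions, not TTYG's implementation: `stub_llm` stands in for the model's decisions, `run_query` stands in for executing SPARQL against a graph, and the triples, names, and message format are all hypothetical.

```python
from typing import Dict, List, Optional

# Toy triples standing in for a knowledge graph (hypothetical data).
TRIPLES = [
    ("alice", "worksOn", "ProjectX"),
    ("bob", "worksOn", "ProjectX"),
    ("alice", "hasSkill", "SPARQL"),
]


def run_query(predicate: str, obj: str) -> List[str]:
    """Stand-in for running a SPARQL query: subjects matching (?s, predicate, obj)."""
    return [s for s, p, o in TRIPLES if p == predicate and o == obj]


def stub_llm(message: str, tool_result: Optional[List[str]] = None) -> Dict:
    """Stand-in for an LLM call; a real model would make these decisions itself."""
    if tool_result is not None:
        # Follow-up call: compose the final answer from the retrieved rows.
        return {"type": "answer", "text": "Matches: " + ", ".join(sorted(tool_result))}
    if "works on" in message.lower():
        # First call: decide that graph retrieval is needed and write a query.
        return {
            "type": "tool_call",
            "sparql": "SELECT ?person WHERE { ?person :worksOn :ProjectX }",
            "pattern": ("worksOn", "ProjectX"),  # what the toy executor actually uses
        }
    # First call: the question can be answered without touching the graph.
    return {"type": "answer", "text": "No graph lookup needed."}


def handle_message(message: str, thread: List[Dict]) -> str:
    """Process one user message and record every step in the message thread."""
    thread.append({"role": "user", "content": message})
    step = stub_llm(message)
    if step["type"] == "tool_call":
        rows = run_query(*step["pattern"])
        thread.append({"role": "tool", "content": rows})
        step = stub_llm(message, tool_result=rows)
    thread.append({"role": "assistant", "content": step["text"]})
    return step["text"]


if __name__ == "__main__":
    thread: List[Dict] = []
    print(handle_message("Who works on ProjectX?", thread))
    print(handle_message("Hello", thread))
```

Because every step is appended to the same `thread` list, later messages in that thread could draw on earlier retrievals, which is the behavior the paragraph above describes.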

In addition to SPARQL query retrieval, TTYG can retrieve data through semantic similarity search. This process uses a semantic embedding of the graph to represent nodes in a semantic space, so that nodes can be retrieved based on their position in this space as well as their location in the network. Retrieval can be repeated many times in a single message thread, allowing you to build up context about your problem with the LLM.
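As a rough illustration of retrieval by position in an embedding space, the sketch below ranks nodes by cosine similarity to a query vector. The node identifiers and three-dimensional vectors are made up for the example; real embeddings come from an embedding model and have far more dimensions. The sketch covers only the embedding half of the description and does not model a node's location in the network.

```python
import math
from typing import Dict, List

# Hypothetical node embeddings; the IRIs and values are illustrative only.
NODE_VECTORS: Dict[str, List[float]] = {
    "ex:KnowledgeGraphEngineer": [0.9, 0.1, 0.0],
    "ex:OntologyDesigner": [0.8, 0.3, 0.1],
    "ex:AccountsPayableClerk": [0.0, 0.2, 0.9],
}


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest_nodes(query_vector: List[float], k: int = 2) -> List[str]:
    """Return the k nodes whose embeddings are most similar to the query vector."""
    ranked = sorted(
        NODE_VECTORS,
        key=lambda node: cosine(query_vector, NODE_VECTORS[node]),
        reverse=True,
    )
    return ranked[:k]


if __name__ == "__main__":
    # A query vector that points in roughly the same direction as the graph-related roles.
    print(nearest_nodes([1.0, 0.2, 0.0]))
```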

Currently, TTYG is set up as a tool-use assistant: it is focused on the problem of retrieving information from GraphDB and is not set up to create longer agent chains. This narrow focus means TTYG is well-positioned to be integrated into broader LLM systems as well as to serve a single-purpose use case. Users can even define custom tools by modifying GraphDB’s interaction with the OpenAI Assistants API. However, this is an involved process, and you may get the best results by working with Graphwise or GraphDB integrators.

How Can GraphRAG Help Your Business?

By adopting a system like TTYG, businesses can dramatically enhance their ability to unlock actionable insights and improve decision-making. TTYG integrates conversational AI with structured knowledge graphs, allowing employees and stakeholders to efficiently access accurate, timely, and reliable information through natural-language interactions without needing specialized technical skills. This leads to faster query resolution, reduced operational bottlenecks, and improved user satisfaction, driving productivity and cost savings across the organization.

Additionally, since answers are based directly on authoritative knowledge graphs, businesses significantly reduce misinformation or hallucination risks. In this way, they achieve greater trust and confidence in the information disseminated internally and externally. Ultimately, these capabilities empower enterprises to leverage their investments in knowledge graph technology effectively, offering them competitive advantages through improved responsiveness, informed decision-making, and increased agility.

We tested the latest TTYG framework to back our internal chat platform, ChatEK, and are delighted to share the results. We loaded a knowledge graph of employee information into GraphDB and set up a TTYG agent, enabling the following retrieval methods:

- SPARQL: allowing sub-queries to be translated into SPARQL queries. This method overcomes one of the main problems of Vector RAG mentioned above: its inability to answer questions whose answers require analyzing more than a few chunks.

- FTS: full-text search across the graph, which can answer open questions that cannot be translated to a SPARQL query.

- Similarity search: using the Semantic Similarity indices, mentioned above, to fetch relevant entities – often needed to provide parameters for the SPARQL queries in the first method.
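The interplay between the first and third methods, where a lookup supplies a parameter for a SPARQL query, can be sketched as follows. Everything here is an assumption made for illustration: the `ex:` vocabulary and skill IRIs are invented, and Python's `difflib` string matching stands in for a real similarity index. The generated query uses a standard SPARQL 1.1 `COUNT` aggregate, the kind of question that spans many records rather than a few text chunks.

```python
import difflib
from typing import Optional

# Hypothetical label-to-IRI lookup; a real system would consult a similarity index.
LABEL_TO_IRI = {
    "knowledge graphs": "http://example.org/skill/KnowledgeGraphs",
    "taxonomy design": "http://example.org/skill/TaxonomyDesign",
    "content strategy": "http://example.org/skill/ContentStrategy",
}

# Aggregation query over a made-up schema; doubled braces escape str.format.
QUERY_TEMPLATE = """PREFIX ex: <http://example.org/schema/>
SELECT (COUNT(DISTINCT ?employee) AS ?n)
WHERE {{ ?employee ex:hasSkill <{skill_iri}> . }}"""


def resolve_skill(mention: str) -> Optional[str]:
    """Map a free-text mention to the closest known skill IRI, or None if nothing is close."""
    matches = difflib.get_close_matches(
        mention.lower(), list(LABEL_TO_IRI), n=1, cutoff=0.6
    )
    return LABEL_TO_IRI[matches[0]] if matches else None


def build_count_query(mention: str) -> Optional[str]:
    """Build a SPARQL query counting employees with the skill the mention resolves to."""
    iri = resolve_skill(mention)
    if iri is None:
        return None
    return QUERY_TEMPLATE.format(skill_iri=iri)


if __name__ == "__main__":
    # The user says "Knowledge Graph"; the graph stores the skill as "knowledge graphs".
    print(build_count_query("Knowledge Graph"))
```

Only IRIs from the lookup table are substituted into the query, never the raw user text, which keeps the generated SPARQL well-formed.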

We also recorded a short video that covers the technical setup in more detail and includes a live demonstration.

Why Adopt Graphwise’s TTYG?

Choosing TTYG for interacting with your graph database offers clear advantages for managing and analyzing graph data, making it a good fit for organizations seeking a straightforward way to build quality AI systems.

Key benefits include:

- Accelerated Information Discovery: Employees and customers can effortlessly access complex data through natural-language questions, reducing the time required to find critical business information or insights.

- Reduced Enterprise Costs: Utilizing TTYG reduces the involvement required from specialized technical personnel to realize value from a graph database. TTYG can also serve effectively in the role of retrieving relevant information from GraphDB for broader AI systems, reducing the amount of custom development that may be required for creating AI solutions.

- Increased Accuracy & Trust: GraphRAG mitigates a key problem when working with LLMs: hallucinations. Retrieving factual information from a graph database gives LLMs access to thorough, up-to-date contextual information surrounding user queries. As a result, an AI system can provide more accurate answers, thereby increasing trust with employees and customers.

Before TTYG, these advantages were hidden behind a wall of technical complexity, both in working with graph data and in implementing effective RAG. Graphwise has made the problem much simpler, allowing you to simply Talk To Your Graph.

Conclusion

By combining the contextual depth of knowledge graphs with the fluency of LLMs, TTYG empowers organizations to extract more value from their data — without the steep learning curve or technical overhead traditionally associated with graph-based systems.

Rather than forcing businesses to choose between ease of use and data fidelity, TTYG offers both. It streamlines access to authoritative knowledge, reduces hallucinations, and simplifies complex information retrieval — all through a natural language interface. Whether as a standalone tool or integrated into a larger AI ecosystem, TTYG is a powerful step forward in making intelligent, reliable, and scalable AI systems a practical reality for modern enterprises.
